Getting To Know K8s | Lab #10: Set Up Kubernetes Federation Between Clusters in Different AWS Regions

This post was updated on September 18th, 2017 for Kubernetes version 1.7.6 and kops version 1.7.0.

Introduction

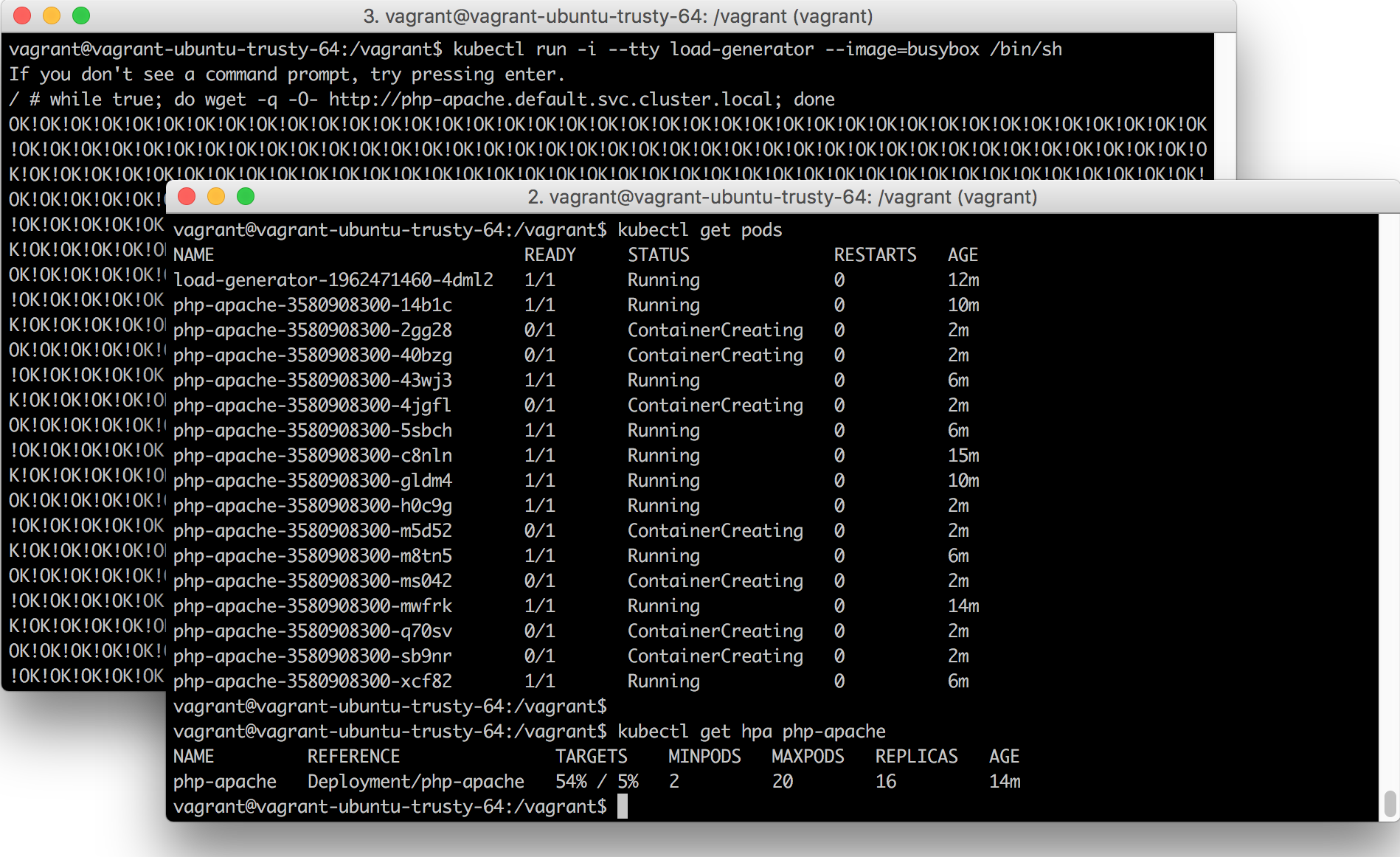

Kubernetes Cluster Federation, first released in version 1.3 in July 2016, allows you to federate multiple clusters together and then control them as a single entity through a Federation control plane. A Federation can include clusters located in different regions of the same cloud provider, clusters spread across multiple cloud providers, and even on-premises clusters.

Some of the use cases for Federation are:

- Geographically Distributed Deployments: Spread Deployments across clusters in different parts of the world

- Hybrid Cloud: Extend Deployments from on-premises clusters to the cloud

- Higher Availability: Federate clusters across different regions or cloud providers

- Application Migration: Simplify the migration of applications from on-premises clusters to the cloud or between cloud providers

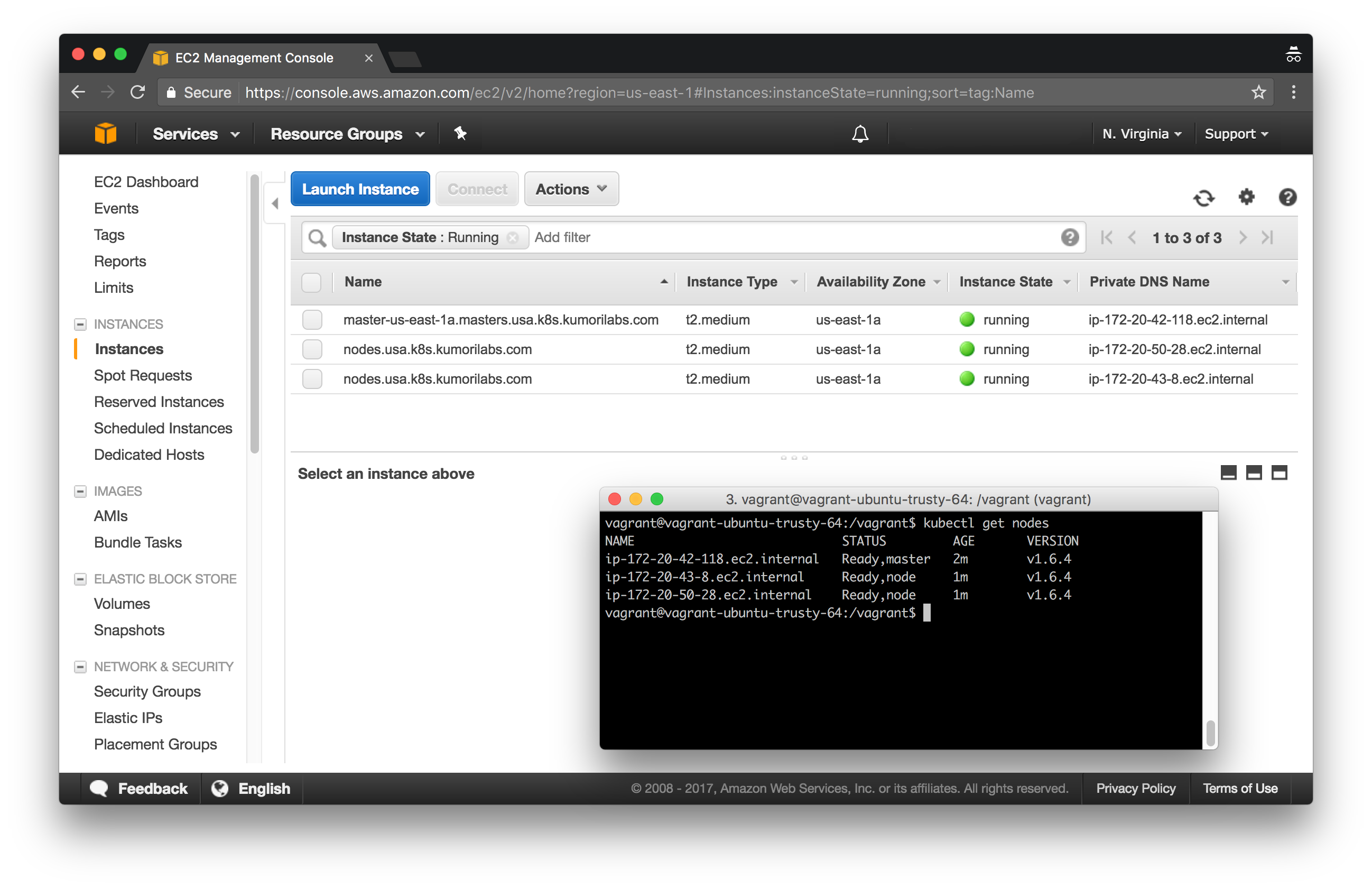

In this lab, we will deploy clusters in three different AWS regions:

- USA: N. Virginia (us-east-1)

- Europe: Ireland (eu-west-1)

- Japan: Tokyo (ap-northeast-1)

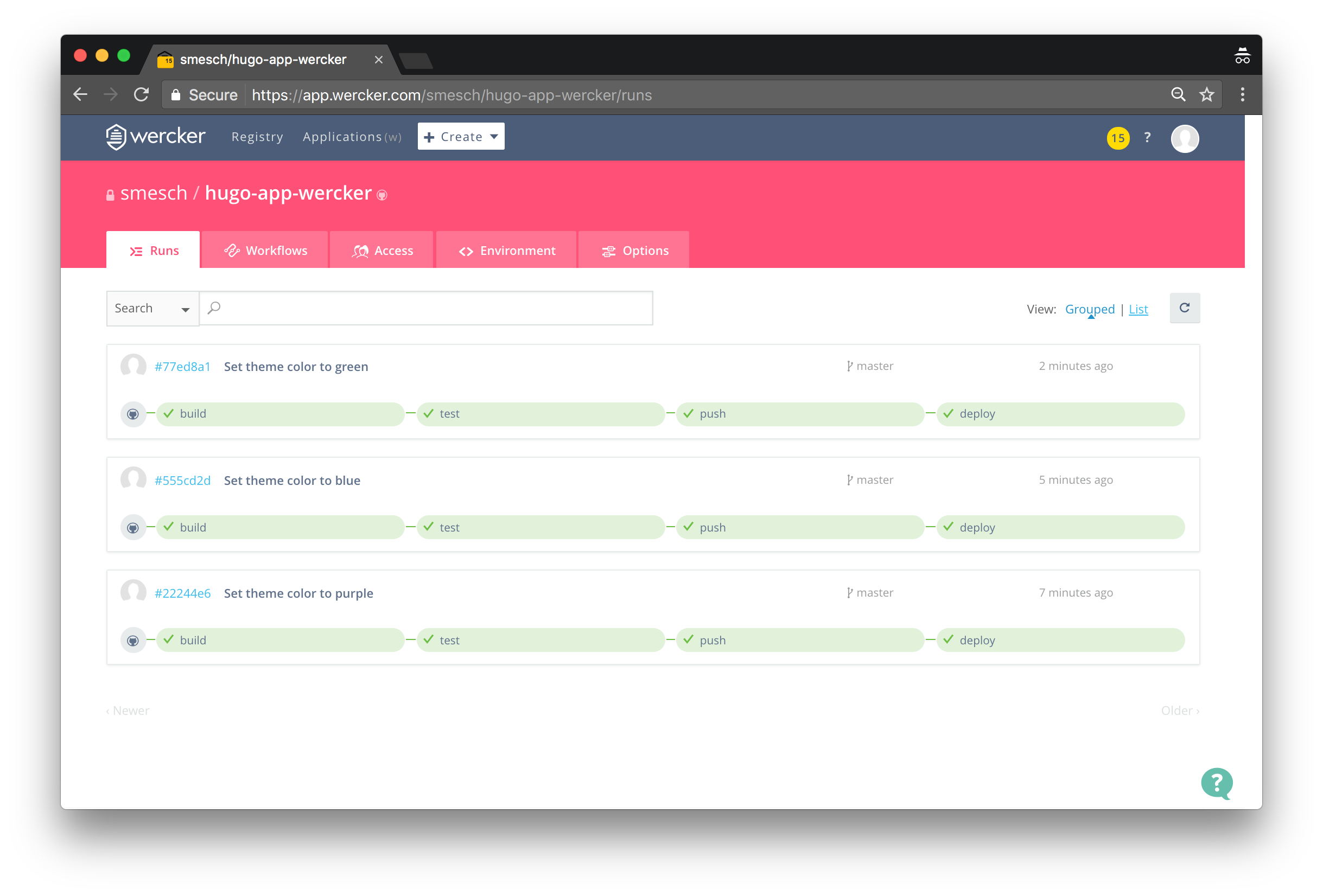

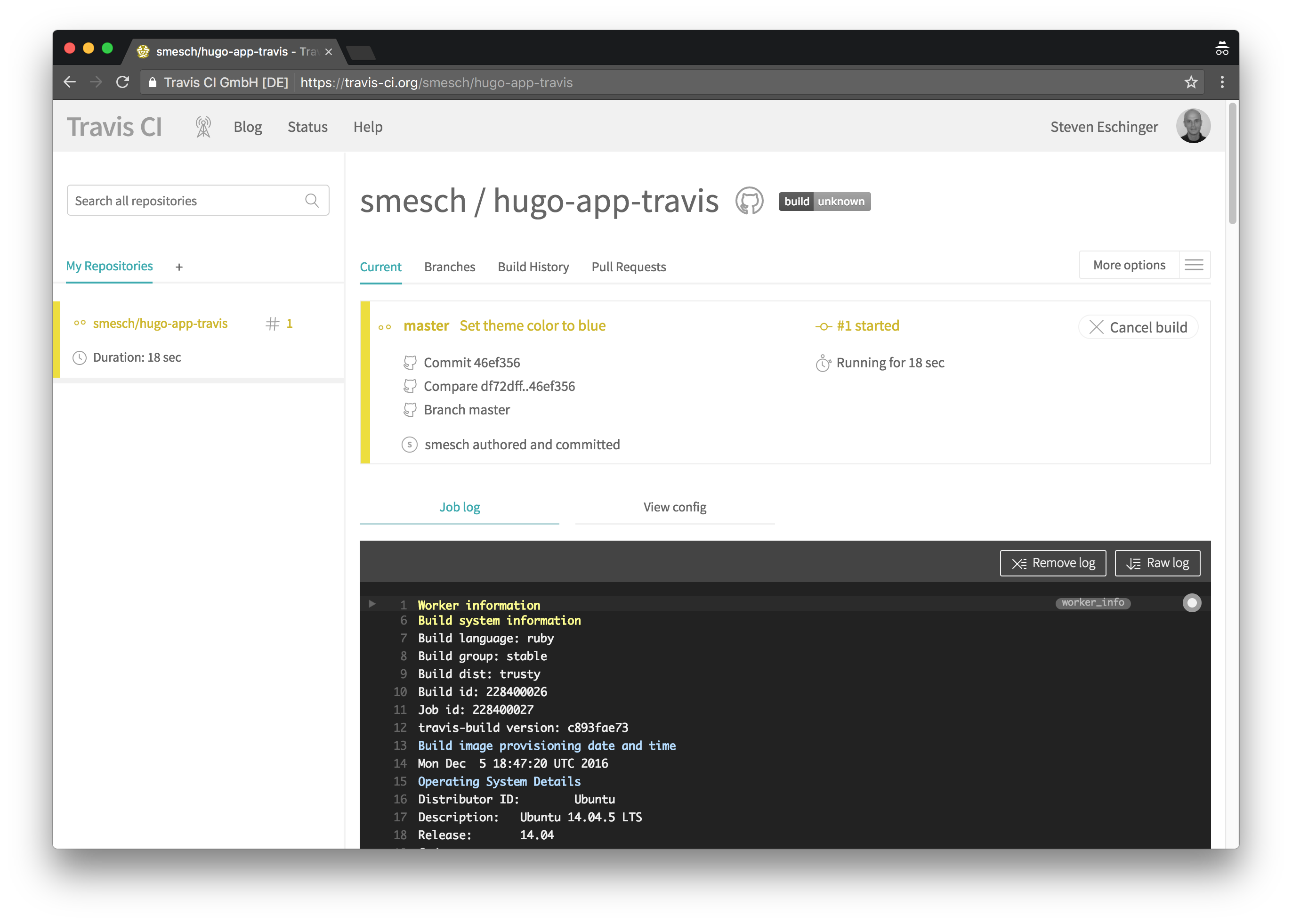

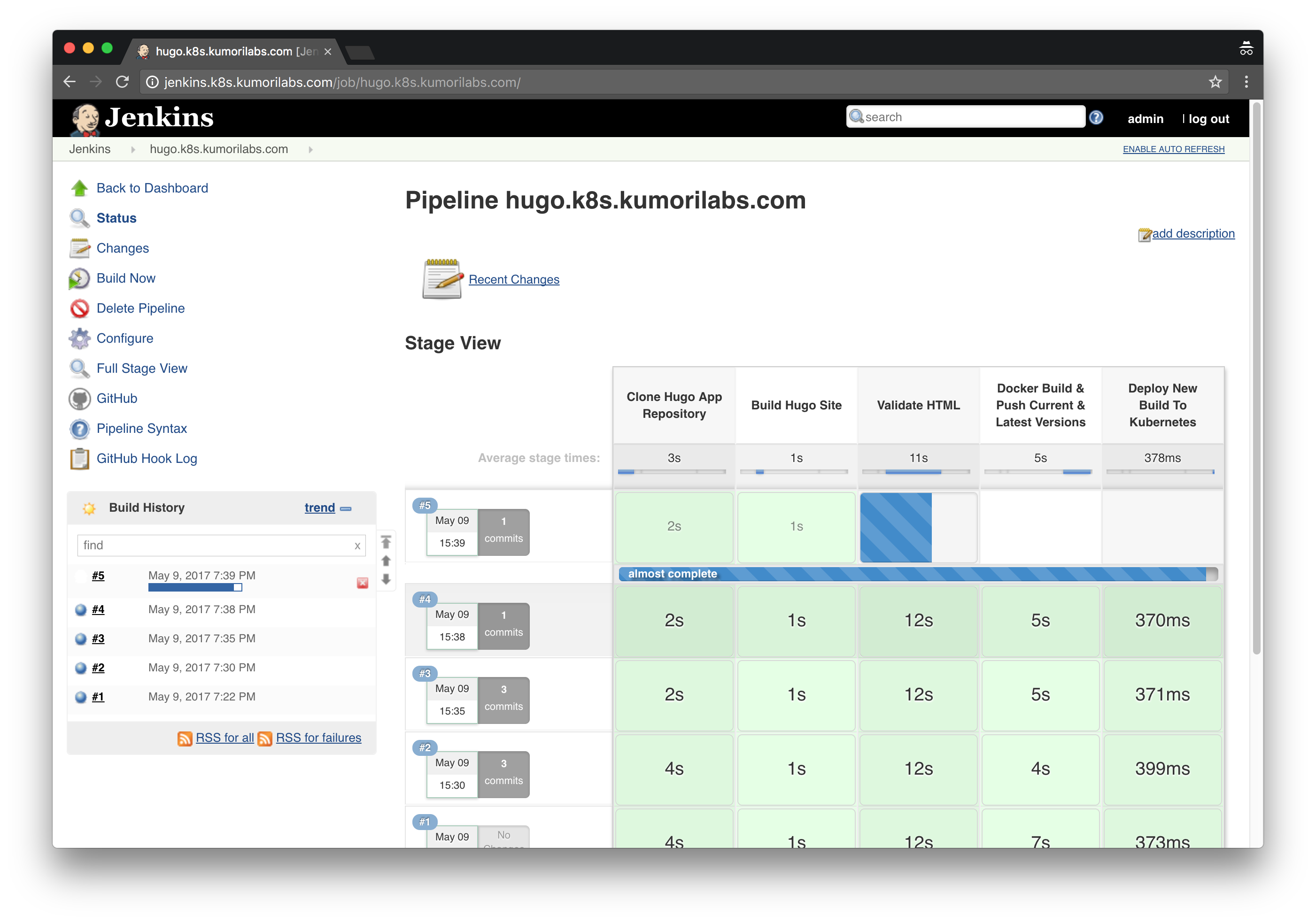

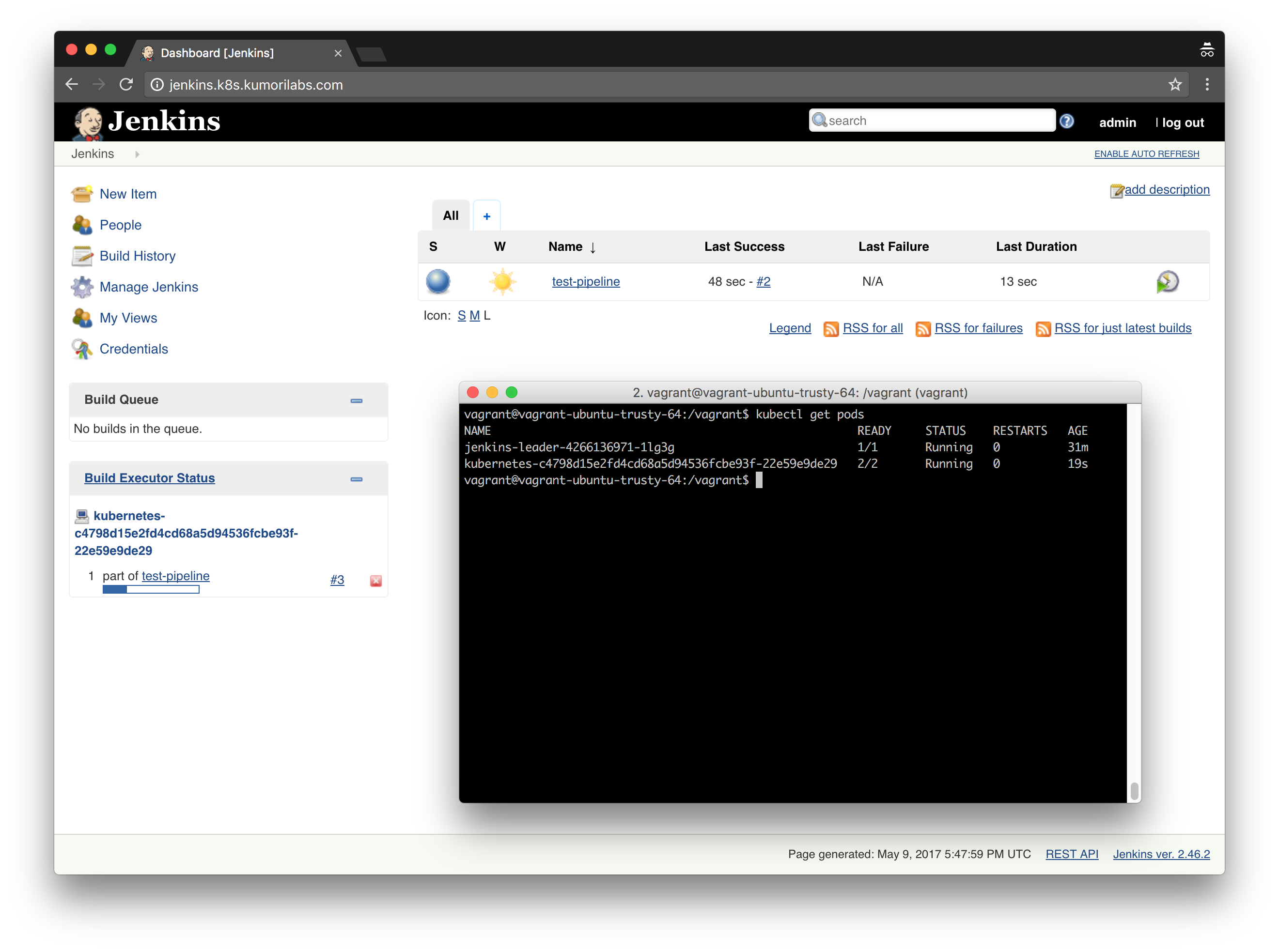

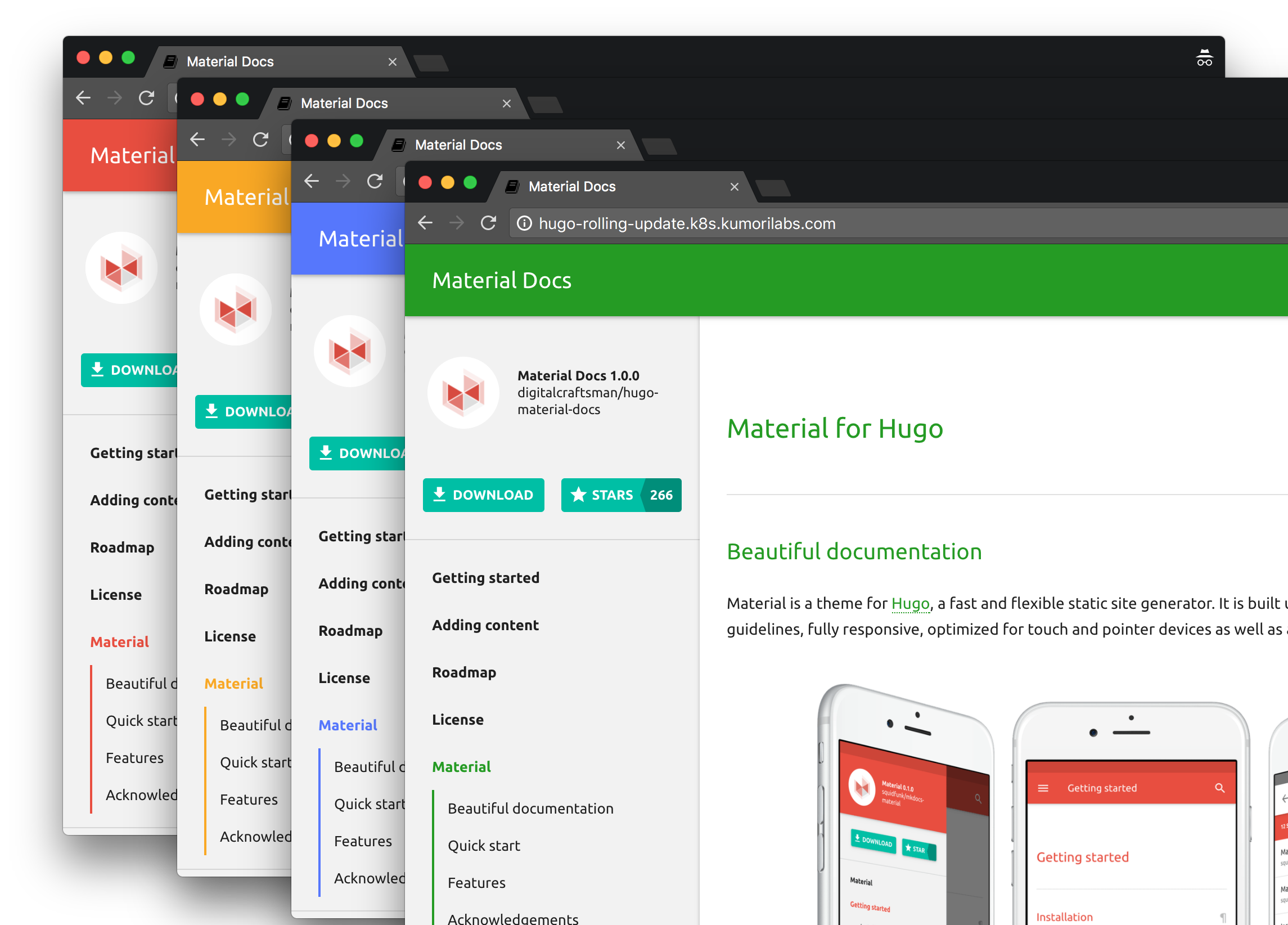

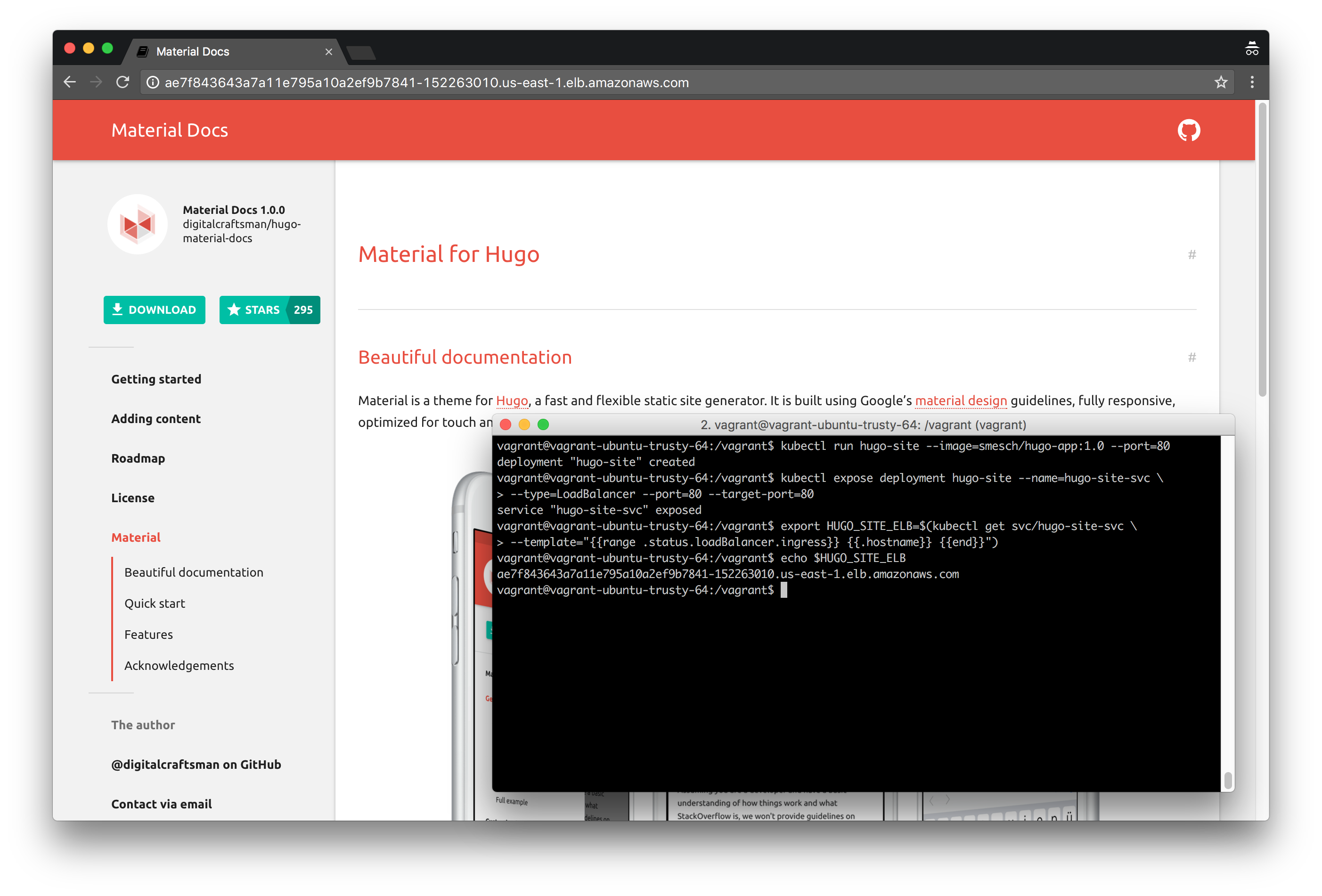

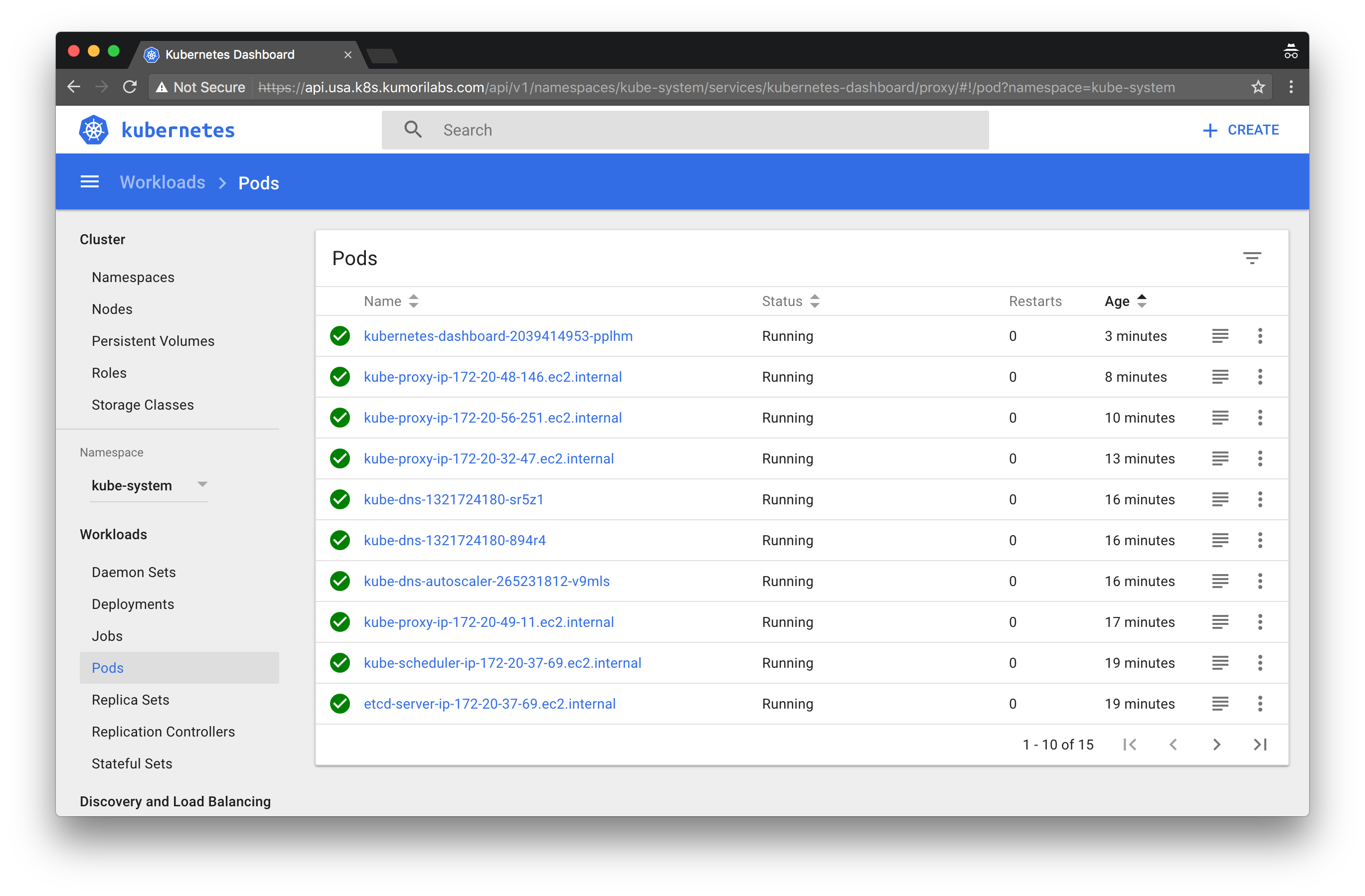

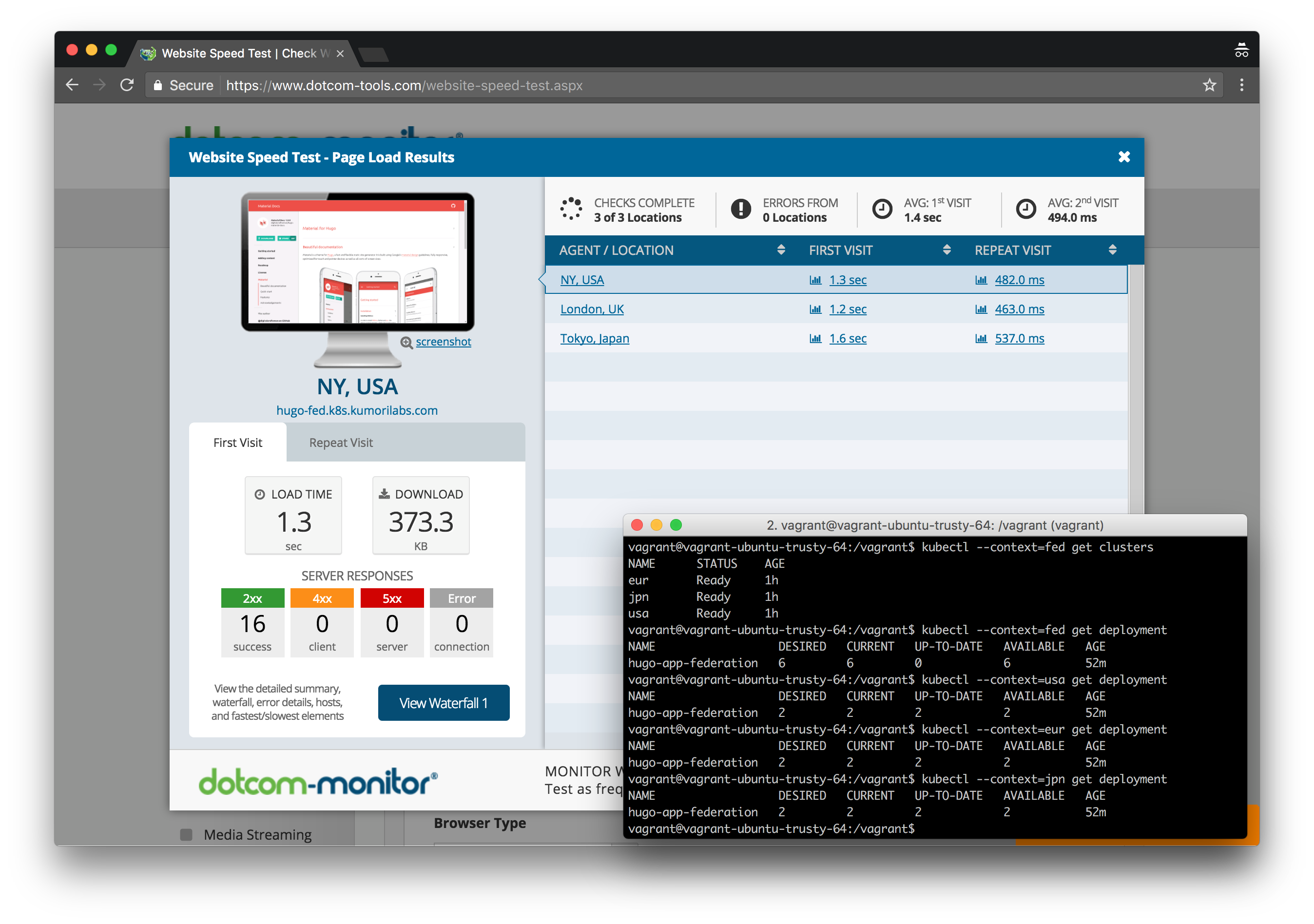

We will deploy the Federation control plane to the USA cluster (host cluster) and then add all three clusters to the Federation. We will then create a federated Deployment for the same Hugo site we have used in previous labs. By default, the Pods for the federated Deployment will be spread out evenly across the three clusters.
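To make "spread out evenly" concrete, here is a minimal Python sketch of the arithmetic involved. It is only an illustration, not the Federation scheduler itself: the function name `spread_replicas`, the cluster names, and the rule that leftover replicas go to the first clusters in the list are all assumptions made for this example.

```python
def spread_replicas(total_replicas, clusters):
    """Split total_replicas as evenly as possible across the given clusters."""
    base, remainder = divmod(total_replicas, len(clusters))
    # Assumption of this sketch: the first `remainder` clusters (in list order)
    # each receive one extra replica when the total does not divide evenly.
    return {
        name: base + (1 if index < remainder else 0)
        for index, name in enumerate(clusters)
    }


# Hypothetical cluster names, one per region used in this lab
clusters = ["usa", "europe", "japan"]

print(spread_replicas(6, clusters))  # {'usa': 2, 'europe': 2, 'japan': 2}
print(spread_replicas(7, clusters))  # {'usa': 3, 'europe': 2, 'japan': 2}
```

With a replica count that is a multiple of three, every cluster ends up with the same number of Pods; otherwise the counts differ by at most one.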

Finally, we will create latency-based DNS records in Route 53, one for each cluster region. This will result in a globally distributed Deployment where end users are automatically routed to the cluster with the lowest network latency from their location, which is usually the nearest one.
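The selection rule behind latency-based routing can be sketched in a few lines of Python. This is a simplified illustration only: Route 53 makes this decision internally from its own latency measurements, and the function name `pick_region` and the latency figures below are made up for the example.

```python
def pick_region(latencies_ms):
    """Return the region whose measured latency is lowest."""
    return min(latencies_ms, key=latencies_ms.get)


# Hypothetical round-trip latencies (in milliseconds) from one user
# to each of the three regions used in this lab
user_latencies = {
    "us-east-1": 85.0,
    "eu-west-1": 22.0,
    "ap-northeast-1": 240.0,
}

print(pick_region(user_latencies))  # eu-west-1
```

Because the choice is driven by latency rather than distance, a user can occasionally be sent to a region that is not the geographically closest one.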