By Steven Eschinger | April 28, 2017

This post was updated on September 18th, 2017 for Kubernetes version 1.7.6 & Kops version 1.7.0

Introduction

In the previous two labs, we created an example continuous deployment pipeline for a Hugo site, using both Jenkins and Travis CI. In this lab, we will recreate the same pipeline using Wercker.

Like Travis CI, Wercker is a hosted continuous integration service. One difference is that Wercker uses a concept called Steps, which are self-contained bash scripts or compiled binaries used for accomplishing specific automation tasks. You can create custom steps on your own or use existing steps from the community via the Steps Registry. At the time of writing, Wercker is free to use for both public and private GitHub repositories (Travis CI is free only for public repositories).

The Wercker pipeline we will create will cover the same four stages that the Jenkins & Travis CI pipelines did in the previous labs:

- Build: Build the Hugo site

- Test: Test the Hugo site to confirm there are no broken links

- Push: Create a new Docker image with the Hugo site and push it to your Docker Hub repository

- Deploy: Trigger a rolling update to the new Docker image in your Kubernetes cluster

The configuration of the pipeline will be defined in a wercker.yml file in your Hugo site GitHub repository, similar to the Jenkinsfile & .travis.yml file used in the previous labs.

And as Wercker is tightly integrated with GitHub, the pipeline will be automatically run every time there is a commit in your GitHub repository.

Activities

- Deploy a new cluster

- Create the Hugo site

- Create the GitHub repository for the Hugo site

- Create the wercker.yml file

- Create the kubeconfig template file

- Deploy the Hugo site

- Configure the Wercker pipeline

- Configure the required environment variables

- Test the Wercker pipeline

- Delete the cluster

Warning: Some of the AWS resources that will be created in the following lab are not eligible for the AWS Free Tier and therefore will cost you money. For example, running a three-node cluster with the suggested instance size of t2.medium will cost you around $0.20 per hour based on current pricing.

Prerequisites

Review the Getting Started section in the introductory post of this blog series

Log into the Vagrant box or your prepared local host environment

Update and then load the required environment variables:

# Must change: Your domain name that is hosted in AWS Route 53

export DOMAIN_NAME="k8s.kumorilabs.com"

# Friendly name to use as an alias for your cluster

export CLUSTER_ALIAS="usa"

# Leave as-is: Full DNS name of your cluster

export CLUSTER_FULL_NAME="${CLUSTER_ALIAS}.${DOMAIN_NAME}"

# AWS availability zone where the cluster will be created

export CLUSTER_AWS_AZ="us-east-1a"

# Leave as-is: AWS Route 53 hosted zone ID for your domain

export DOMAIN_NAME_ZONE_ID=$(aws route53 list-hosted-zones \

| jq -r '.HostedZones[] | select(.Name=="'${DOMAIN_NAME}'.") | .Id' \

| sed 's/\/hostedzone\///')
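The last stage of that pipeline is easy to misread, so here is a minimal sketch of what the sed expression does on its own. Route 53 returns hosted zone IDs with a /hostedzone/ prefix, and the expression removes it. The helper name strip_zone_prefix and the ID Z1EXAMPLE12345 are made up for illustration and are not part of the lab repository.

```shell
# Hypothetical helper: strip the "/hostedzone/" prefix from a Route 53 zone ID.
strip_zone_prefix() {
  printf '%s\n' "$1" | sed 's/\/hostedzone\///'
}

# Sample value only; a real ID comes from "aws route53 list-hosted-zones".
ZONE_ID=$(strip_zone_prefix "/hostedzone/Z1EXAMPLE12345")
echo "${ZONE_ID}"
```

If DOMAIN_NAME_ZONE_ID is empty after running the real commands, a likely cause is that DOMAIN_NAME does not exactly match the name of a hosted zone in your account.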

Implementation

Deploy a new cluster

Create the S3 bucket in AWS, which will be used by Kops for cluster configuration storage:

aws s3api create-bucket --bucket ${CLUSTER_FULL_NAME}-state

Set the KOPS_STATE_STORE variable to the URL of the S3 bucket that was just created:

export KOPS_STATE_STORE="s3://${CLUSTER_FULL_NAME}-state"

Create the cluster with Kops:

kops create cluster \

--name=${CLUSTER_FULL_NAME} \

--zones=${CLUSTER_AWS_AZ} \

--master-size="t2.medium" \

--node-size="t2.medium" \

--node-count="2" \

--dns-zone=${DOMAIN_NAME} \

--ssh-public-key="~/.ssh/id_rsa.pub" \

--kubernetes-version="1.7.6" --yes

It will take approximately 5 minutes for the cluster to be ready. To check if the cluster is ready:

kubectl get nodes

NAME STATUS AGE VERSION

ip-172-20-48-9.ec2.internal Ready 4m v1.7.6

ip-172-20-55-48.ec2.internal Ready 2m v1.7.6

ip-172-20-58-241.ec2.internal Ready 3m v1.7.6
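If you would rather check readiness from a script than by eye, the sketch below counts the nodes whose STATUS column reads exactly Ready. It assumes the four-column layout shown in the listing above; the helper name count_ready_nodes is hypothetical, and the sample text stands in for a live cluster.

```shell
# Hypothetical helper: count lines whose STATUS column is exactly "Ready".
# Reads "kubectl get nodes" style output on stdin and skips the header row.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample output copied from the listing above, used instead of a live cluster.
SAMPLE_OUTPUT='NAME STATUS AGE VERSION
ip-172-20-48-9.ec2.internal Ready 4m v1.7.6
ip-172-20-55-48.ec2.internal Ready 2m v1.7.6
ip-172-20-58-241.ec2.internal Ready 3m v1.7.6'

READY_COUNT=$(printf '%s\n' "${SAMPLE_OUTPUT}" | count_ready_nodes)
echo "Ready nodes: ${READY_COUNT}"
```

Against a real cluster you would pipe the command into the helper (kubectl get nodes | count_ready_nodes) and expect 3 for this lab: one master plus two nodes.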

Create the Hugo site

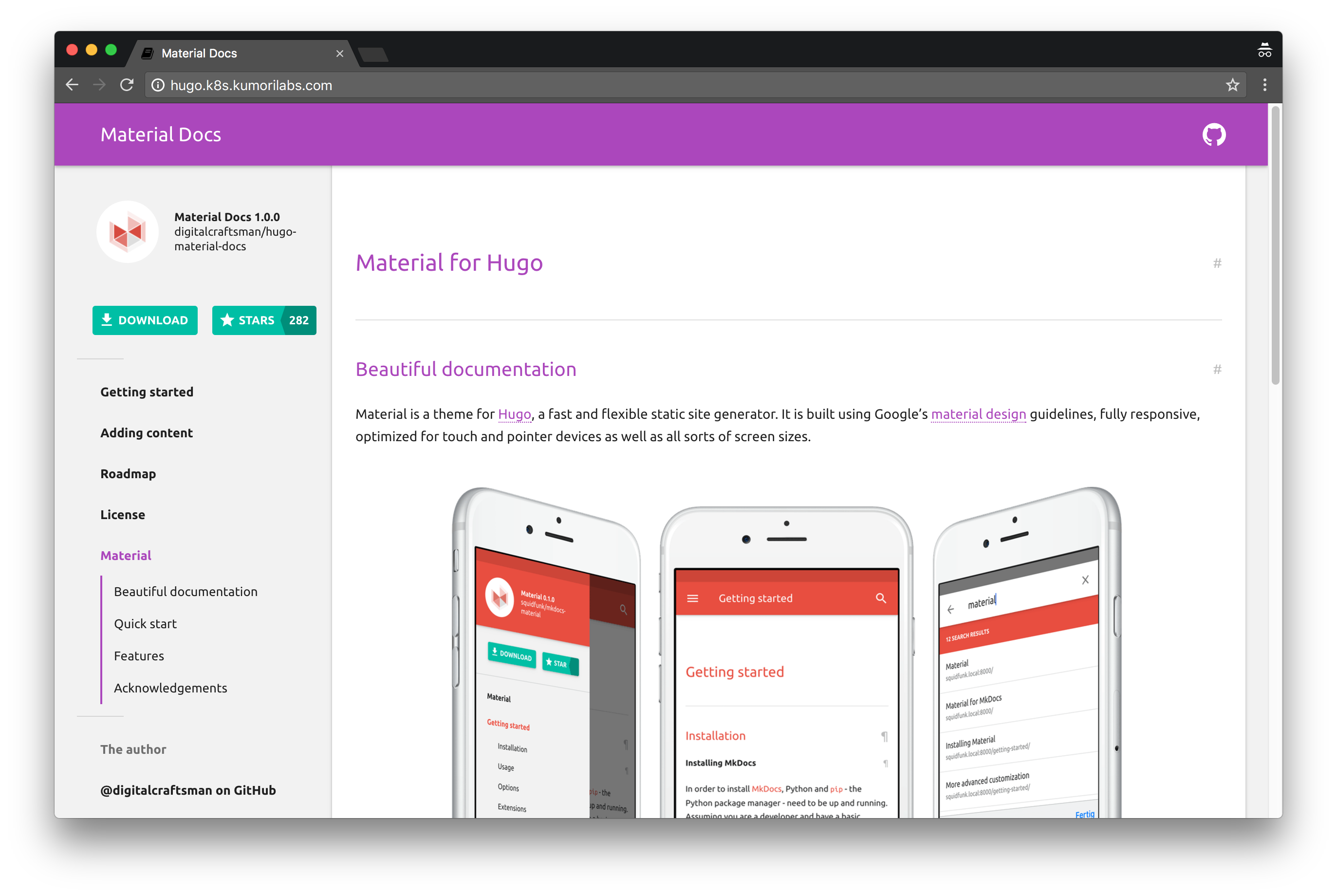

In this lab, we will create a Hugo site with the same theme (Material Docs by Digitalcraftsman) that we used in previous labs.

From the root of the repository, execute the following, which will:

- Create a new blank Hugo site in the hugo-app-wercker/ folder

- Clone the Material Docs Hugo theme to the themes folder (hugo-app-wercker/themes)

- Copy the example content from the Material Docs theme to the root of the Hugo site

- Remove the Git folder from the Material Docs theme

- Change the value of baseurl in the Hugo config file to ensure the site will work with any domain

hugo new site hugo-app-wercker/

git clone https://github.com/digitalcraftsman/hugo-material-docs.git \

hugo-app-wercker/themes/hugo-material-docs

cp -rf hugo-app-wercker/themes/hugo-material-docs/exampleSite/* hugo-app-wercker/

rm -rf hugo-app-wercker/themes/hugo-material-docs/.git/

sed -i -e 's|baseurl =.*|baseurl = "/"|g' hugo-app-wercker/config.toml
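To see what the final sed command does without touching the real site, the sketch below runs the same expression against a throwaway file. The file contents and the SCRATCH_CONFIG name are illustrative assumptions, not the actual config.toml shipped with the theme.

```shell
# Work on a throwaway file so the real config.toml is not touched.
SCRATCH_CONFIG=$(mktemp)
cat > "${SCRATCH_CONFIG}" <<'EOF'
baseurl = "https://example.org/"
title = "Sample site"
EOF

# Same expression as above: replace the whole baseurl line with a relative root.
sed -i -e 's|baseurl =.*|baseurl = "/"|g' "${SCRATCH_CONFIG}"

BASEURL_LINE=$(grep '^baseurl' "${SCRATCH_CONFIG}")
echo "${BASEURL_LINE}"
rm -f "${SCRATCH_CONFIG}"
```

The | delimiter is used so that the slashes in a URL do not need escaping inside the expression.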

Create the GitHub repository for the Hugo site

Configure your global Git settings with your username, email and set the password cache to 60 minutes:

# Set your GitHub username and email

export GITHUB_USERNAME="smesch"

export GITHUB_EMAIL="steven@kumorilabs.com"

git config --global user.name "${GITHUB_USERNAME}"

git config --global user.email "${GITHUB_EMAIL}"

git config --global credential.helper cache

git config --global credential.helper 'cache --timeout=3600'

Create a new GitHub repository called hugo-app-wercker in your GitHub account (you will be prompted for your GitHub password):

curl -u "${GITHUB_USERNAME}" https://api.github.com/user/repos \

-d '{"name":"hugo-app-wercker"}'

Create the README.md file and then upload the contents of the Hugo site (hugo-app-wercker/) to the GitHub repository you just created (you will be prompted for your GitHub credentials):

echo "# hugo-app-wercker" > hugo-app-wercker/README.md

git -C hugo-app-wercker/ init

git -C hugo-app-wercker/ add .

git -C hugo-app-wercker/ commit -m "Create Hugo site repository"

git -C hugo-app-wercker/ remote add origin \

https://github.com/${GITHUB_USERNAME}/hugo-app-wercker.git

git -C hugo-app-wercker/ push -u origin master

You now have your own repository populated with the Hugo site content.

Create the wercker.yml file

Now let’s have a look at the wercker.yml file that we will add to your repository:

box: ruby
build:
  steps:
    - arjen/hugo-build:
        version: "0.20"
        flags: --uglyURLs
test:
  steps:
    - kyleboyle/html-proofer-test:
        version: "2.3.0"
        basedir: public
        arguments: --checks-to-ignore ImageCheck --only-4xx
push:
  box:
    id: nginx:alpine
    cmd: /bin/sh
  steps:
    - script:
        name: Copy Hugo output to NGINX root
        code: |
          cp -a ${WERCKER_ROOT}/public/* /usr/share/nginx/html
    - internal/docker-push:
        cmd: nginx -g "daemon off;"
        username: ${DOCKER_USERNAME}
        password: ${DOCKER_PASSWORD}
        tag: ${WERCKER_GIT_COMMIT}
        repository: ${DOCKER_USERNAME}/${DOCKER_IMAGE_NAME}
        registry: https://registry.hub.docker.com
deploy:
  steps:
    - script:
        name: Kubeconfig template file population
        code: |
          sed -i -e 's|KUBE_CA_CERT|'"${KUBE_CA_CERT}"'|g' kubeconfig
          sed -i -e 's|KUBE_ENDPOINT|'"${KUBE_ENDPOINT}"'|g' kubeconfig
          sed -i -e 's|KUBE_ADMIN_CERT|'"${KUBE_ADMIN_CERT}"'|g' kubeconfig
          sed -i -e 's|KUBE_ADMIN_KEY|'"${KUBE_ADMIN_KEY}"'|g' kubeconfig
          sed -i -e 's|KUBE_USERNAME|'"${KUBE_USERNAME}"'|g' kubeconfig
    - kubectl:
        server: ${KUBE_ENDPOINT}
        username: ${KUBE_USERNAME}
        command: --kubeconfig kubeconfig set image deployment/${K8S_DEPLOYMENT_NAME} ${K8S_DEPLOYMENT_NAME}=${DOCKER_USERNAME}/${DOCKER_IMAGE_NAME}:${WERCKER_GIT_COMMIT}
    - script:
        name: Remove kubeconfig file
        code: rm kubeconfig

Breakdown of the wercker.yml file

- Set the base box to use the ruby:latest Docker image

- Run pre-built steps from the Steps Registry

- Step hugo-build will build the Hugo site from your repository and output the static HTML to the default folder (public)

- Step html-proofer-test will test the external links in the static HTML files in the public folder

- For the push pipeline, use the nginx:alpine Docker image

- Copy the static HTML files from the public folder to the default NGINX folder

- Build a new Docker image using the underlying NGINX box

- Push the new Docker image to your Docker Hub account using the Git commit hash as the tag

- Populate the kubeconfig template file with the values of the environment variables we will configure for cluster authentication

- Start a rolling update for the Kubernetes Deployment, using the new Docker image with the Git commit hash tag
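To make the last two items concrete, the sketch below expands the variables from wercker.yml by hand and prints the kubectl arguments the deploy pipeline ends up with. All values are placeholders chosen for illustration (the commit hash is shortened; Wercker supplies the real one at run time).

```shell
# Placeholder values for illustration; Wercker supplies the real ones at run time.
DOCKER_USERNAME="exampleuser"
DOCKER_IMAGE_NAME="hugo-app-wercker"
K8S_DEPLOYMENT_NAME="hugo-app"
WERCKER_GIT_COMMIT="0123abc"

# Image reference pushed by the push pipeline and consumed by the deploy pipeline.
IMAGE_REF="${DOCKER_USERNAME}/${DOCKER_IMAGE_NAME}:${WERCKER_GIT_COMMIT}"

# Arguments the kubectl step receives once the variables are expanded.
KUBECTL_ARGS="--kubeconfig kubeconfig set image deployment/${K8S_DEPLOYMENT_NAME} ${K8S_DEPLOYMENT_NAME}=${IMAGE_REF}"
echo "kubectl ${KUBECTL_ARGS}"
```

Note that the command uses K8S_DEPLOYMENT_NAME twice, once for the Deployment and once for the container, so it relies on the container in the Deployment having the same name as the Deployment itself.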

Copy the wercker.yml file to your GitHub repo folder:

cp github/hugo-app-wercker/wercker.yml hugo-app-wercker/wercker.yml

Create the kubeconfig template file

And below is the kubeconfig template file that will be used by Wercker to authenticate with your Kubernetes cluster:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: KUBE_CA_CERT
    server: KUBE_ENDPOINT
  name: k8s-cluster
contexts:
- context:
    cluster: k8s-cluster
    user: k8s-cluster
  name: k8s-cluster
current-context: k8s-cluster
kind: Config
preferences: {}
users:
- name: k8s-cluster
  user:
    client-certificate-data: KUBE_ADMIN_CERT
    client-key-data: KUBE_ADMIN_KEY
    username: KUBE_USERNAME

There are five placeholders in the kubeconfig template file, which will be replaced by the values we will configure for environment variables in the Wercker job configuration:

Kubeconfig Placeholders

- certificate-authority-data: KUBE_CA_CERT

- server: KUBE_ENDPOINT

- client-certificate-data: KUBE_ADMIN_CERT

- client-key-data: KUBE_ADMIN_KEY

- username: KUBE_USERNAME
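The substitution that the deploy pipeline performs on these placeholders can be tried locally first. The sketch below is a dry run under stated assumptions: it uses a scratch file containing only the five placeholder lines and dummy SAMPLE_ values (none of them real credentials), then applies the same sed pattern as wercker.yml and counts any placeholders left behind.

```shell
# Scratch copy with the same five placeholders as the template above.
SCRATCH_KUBECONFIG=$(mktemp)
cat > "${SCRATCH_KUBECONFIG}" <<'EOF'
certificate-authority-data: KUBE_CA_CERT
server: KUBE_ENDPOINT
client-certificate-data: KUBE_ADMIN_CERT
client-key-data: KUBE_ADMIN_KEY
username: KUBE_USERNAME
EOF

# Dummy strings for illustration only; never commit real credentials.
SAMPLE_KUBE_CA_CERT="ZHVtbXk/Y2E+ZGF0YQ=="
SAMPLE_KUBE_ENDPOINT="https://api.example.invalid"
SAMPLE_KUBE_ADMIN_CERT="ZHVtbXk/Y2VydA=="
SAMPLE_KUBE_ADMIN_KEY="ZHVtbXk/a2V5"
SAMPLE_KUBE_USERNAME="admin"

# Same substitution pattern as the deploy pipeline in wercker.yml.
for name in KUBE_CA_CERT KUBE_ENDPOINT KUBE_ADMIN_CERT KUBE_ADMIN_KEY KUBE_USERNAME; do
  eval "value=\${SAMPLE_${name}}"
  sed -i -e 's|'"${name}"'|'"${value}"'|g' "${SCRATCH_KUBECONFIG}"
done

# Count lines that still contain a placeholder; 0 means all were replaced.
LEFTOVER=$(grep -c 'KUBE_' "${SCRATCH_KUBECONFIG}" || true)
SERVER_LINE=$(grep '^server' "${SCRATCH_KUBECONFIG}")
echo "Unreplaced placeholders: ${LEFTOVER}"
echo "${SERVER_LINE}"
rm -f "${SCRATCH_KUBECONFIG}"
```

The | delimiter matters here: base64-encoded certificate data can contain / and +, but never |, so the values pass through the sed expression without escaping.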

Copy the kubeconfig to your GitHub repo folder:

cp github/hugo-app-wercker/kubeconfig hugo-app-wercker/kubeconfig

And finally, commit the two files to GitHub:

git -C hugo-app-wercker/ add .

git -C hugo-app-wercker/ commit -m "Upload wercker.yml & kubeconfig"

git -C hugo-app-wercker/ push -u origin master

Deploy the Hugo site

Create the initial Deployment of the Hugo site:

kubectl create -f ./kubernetes/hugo-app/

deployment "hugo-app" created

service "hugo-app-svc" created

Wait about a minute for the Service to create the AWS ELB and then create the DNS CNAME record in your Route 53 domain with a prefix of hugo (e.g., hugo.k8s.kumorilabs.com), using the dns-record-single.json template file in the repository:

# Set the DNS record prefix & the Service name and then retrieve the ELB URL

export DNS_RECORD_PREFIX="hugo"

export SERVICE_NAME="hugo-app-svc"

export HUGO_APP_ELB=$(kubectl get svc/${SERVICE_NAME} \

--template="{{range .status.loadBalancer.ingress}} {{.hostname}} {{end}}")

# Add to JSON file

sed -i -e 's|"Name": ".*|"Name": "'"${DNS_RECORD_PREFIX}.${DOMAIN_NAME}"'",|g' \

scripts/apps/dns-records/dns-record-single.json

sed -i -e 's|"Value": ".*|"Value": "'"${HUGO_APP_ELB}"'"|g' \

scripts/apps/dns-records/dns-record-single.json

# Create DNS records

aws route53 change-resource-record-sets \

--hosted-zone-id ${DOMAIN_NAME_ZONE_ID} \

--change-batch file://scripts/apps/dns-records/dns-record-single.json
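The two sed commands can be rehearsed on a scratch file before they touch the template. The sketch below assumes a change batch laid out with "Name" and "Value" each on their own line; the real dns-record-single.json in the repository may differ, and the SAMPLE_ values are invented. It also trims whitespace from the hostname, in case the template output carries leading or trailing spaces.

```shell
# Assumed layout of a Route 53 change batch; the repository file may differ.
SCRATCH_JSON=$(mktemp)
cat > "${SCRATCH_JSON}" <<'EOF'
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "placeholder",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "placeholder"
          }
        ]
      }
    }
  ]
}
EOF

# Invented sample values; the padded hostname mimics untrimmed template output.
SAMPLE_PREFIX="hugo"
SAMPLE_DOMAIN="k8s.example.com"
SAMPLE_RAW_ELB=" sample-1234567890.us-east-1.elb.amazonaws.com "
SAMPLE_ELB=$(printf '%s' "${SAMPLE_RAW_ELB}" | tr -d '[:space:]')

# Same expressions as above, pointed at the scratch file.
sed -i -e 's|"Name": ".*|"Name": "'"${SAMPLE_PREFIX}.${SAMPLE_DOMAIN}"'",|g' "${SCRATCH_JSON}"
sed -i -e 's|"Value": ".*|"Value": "'"${SAMPLE_ELB}"'"|g' "${SCRATCH_JSON}"

NAME_LINE=$(grep '"Name"' "${SCRATCH_JSON}" | sed 's/^ *//')
VALUE_LINE=$(grep '"Value"' "${SCRATCH_JSON}" | sed 's/^ *//')
echo "${NAME_LINE}"
echo "${VALUE_LINE}"
rm -f "${SCRATCH_JSON}"
```

Because each expression rewrites everything from the key to the end of the line, the Name replacement has to add the trailing comma back, while the Value replacement does not.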

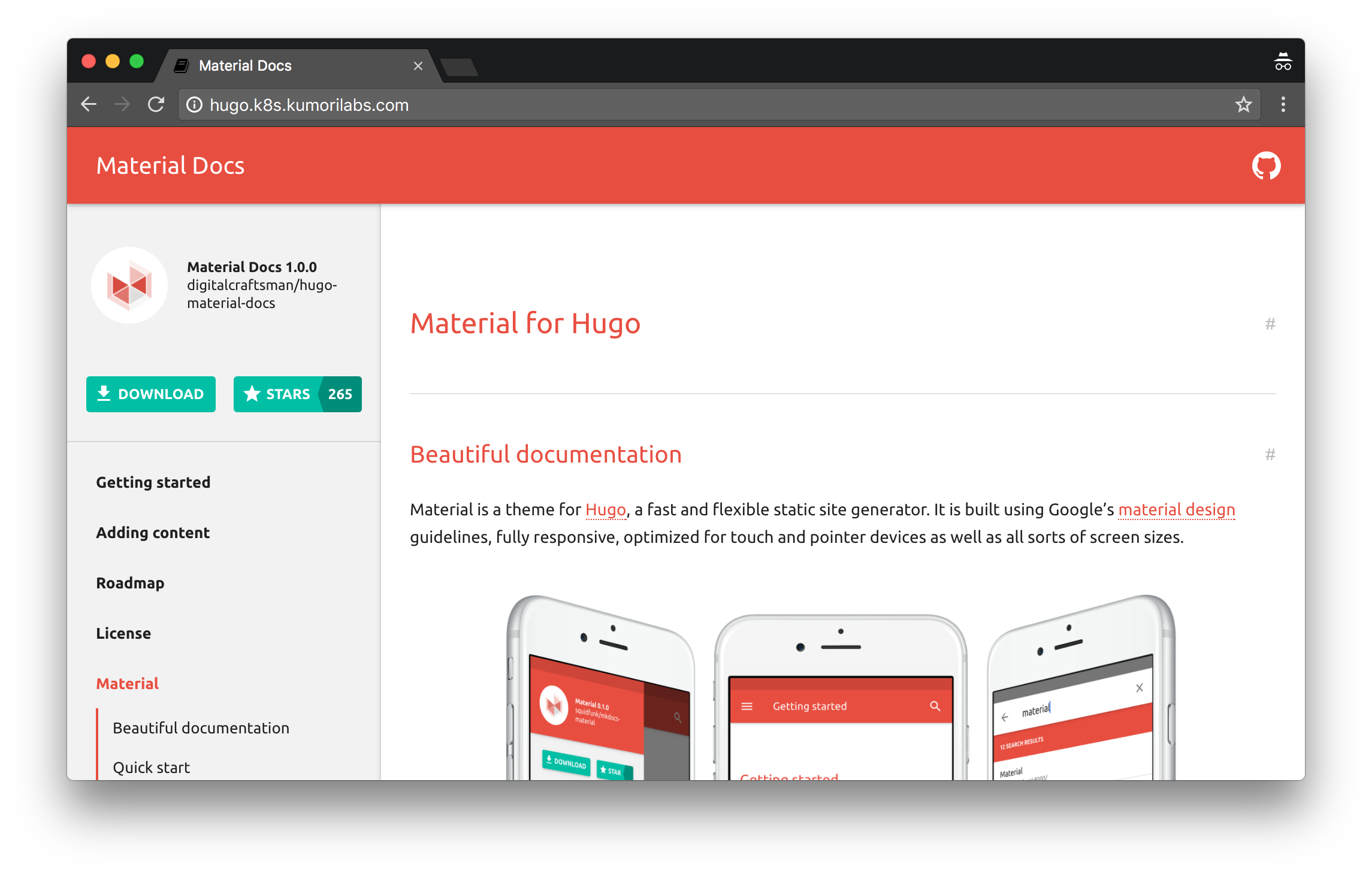

The Hugo site should now be reachable at the DNS name you just created (e.g., hugo.k8s.kumorilabs.com) and as we used the default 1.0 Docker image tag, the theme will be red:

Configure the Wercker pipeline

Let’s now configure the pipeline in Wercker.

Browse to the Wercker site:

- Log in with your GitHub credentials

- Click Create in the navigation bar and choose Application

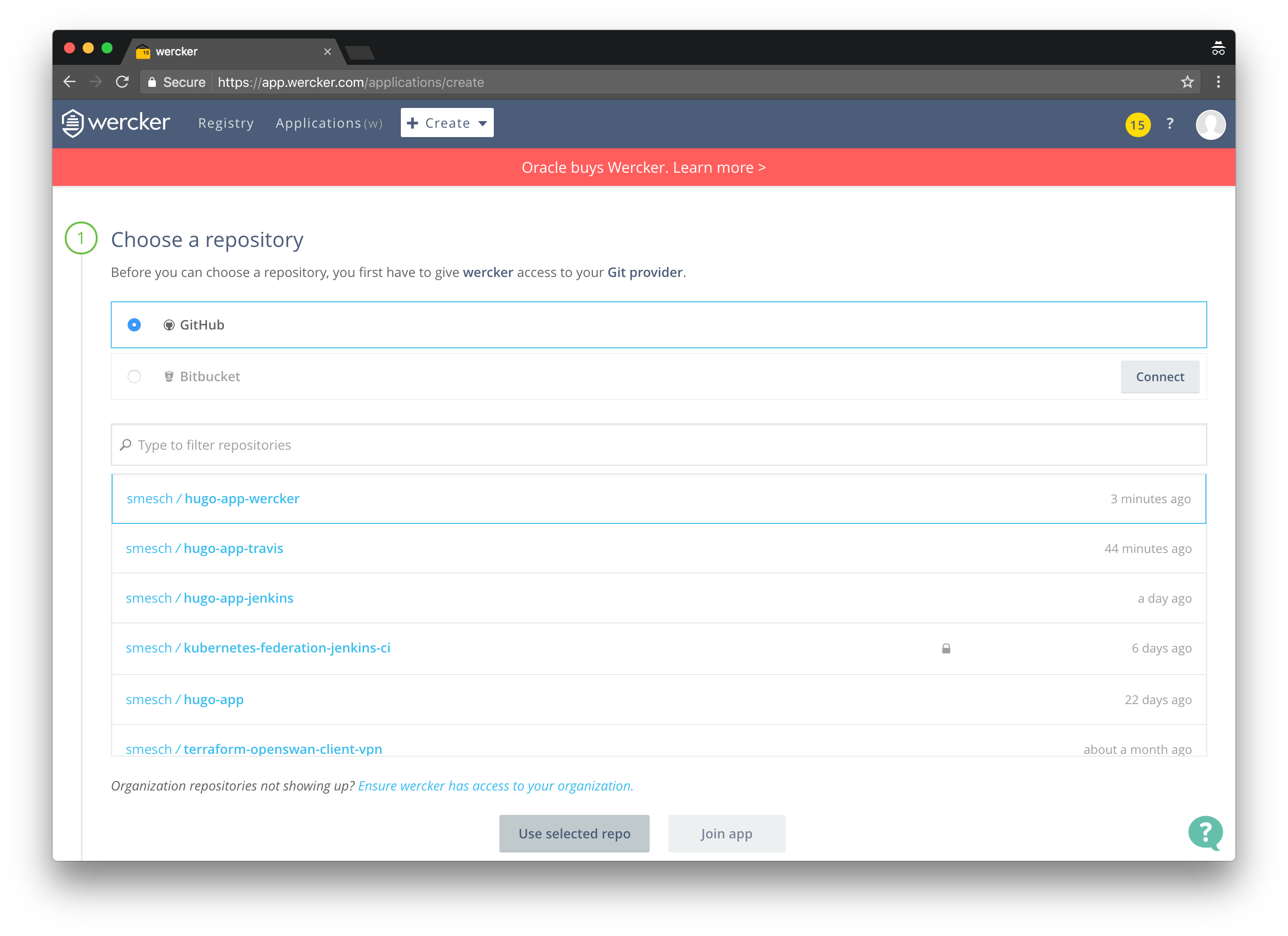

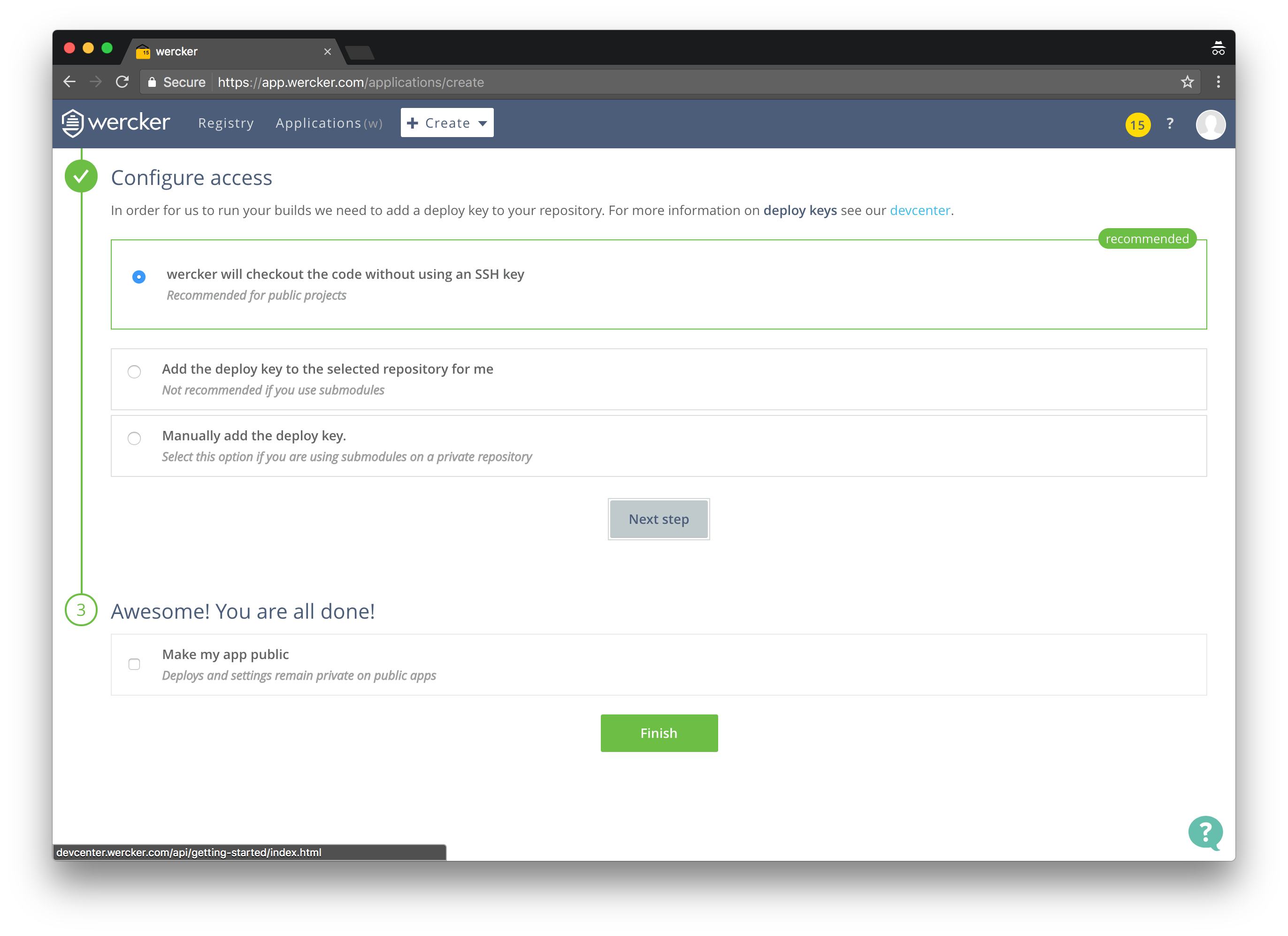

- Click GitHub, select your hugo-app-wercker repository and then click Use selected repo

- Select the option "wercker will checkout the code without using an SSH key", click Next step and then Finish

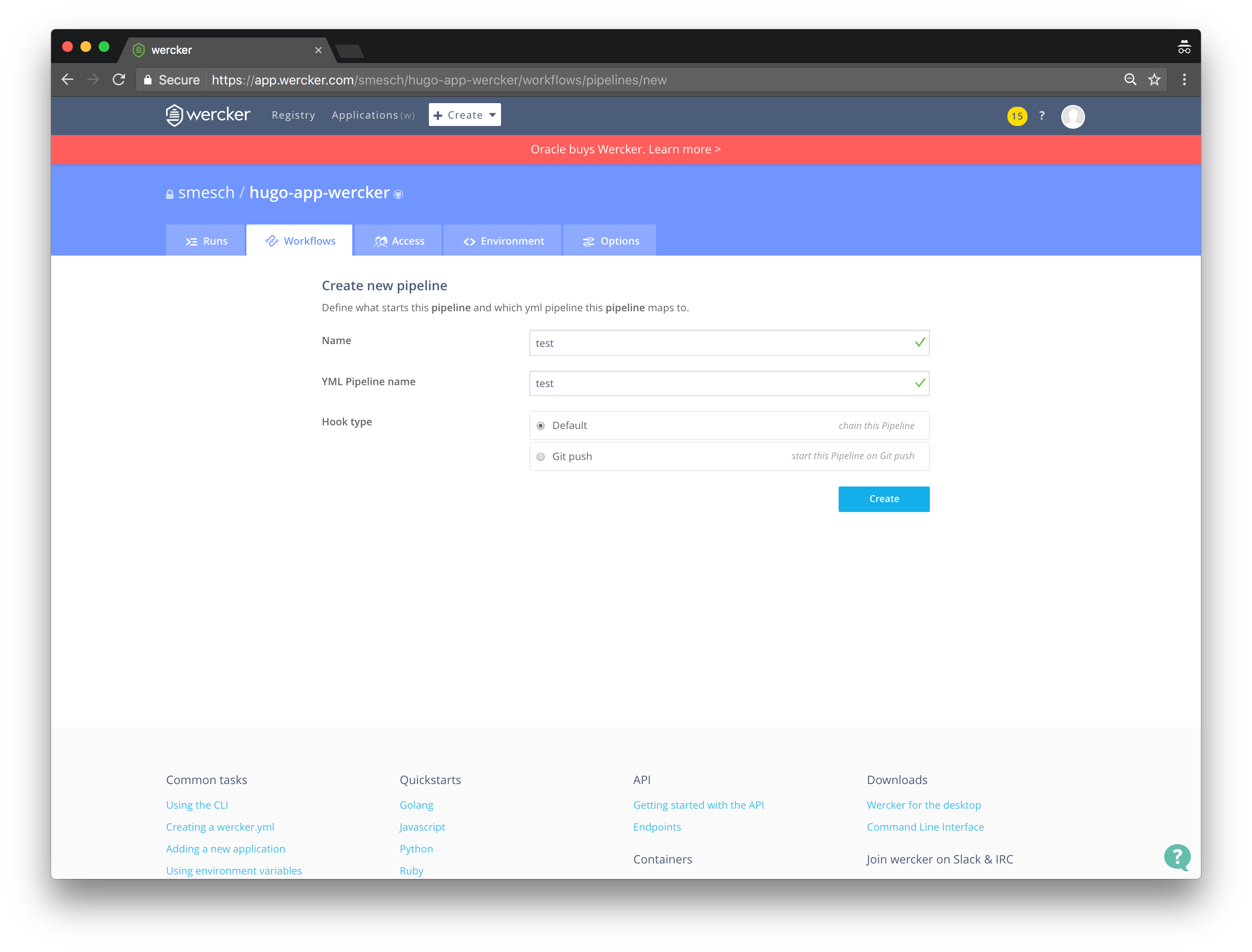

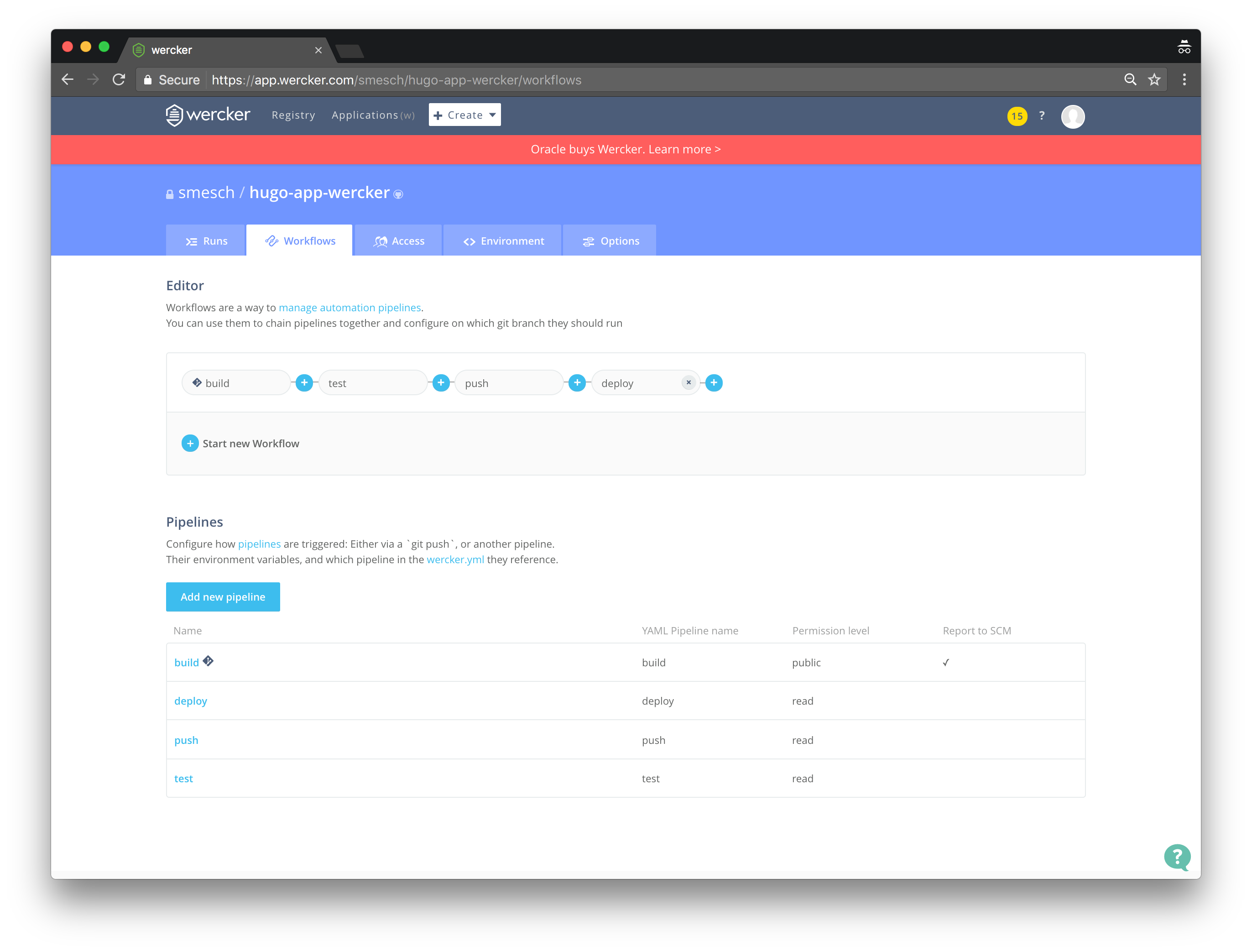

Now let’s set up the workflow in Wercker to reflect the configuration in the wercker.yml file. Start by creating the Test pipeline:

Test Pipeline

- Click on the Workflows tab

- Click Add new pipeline in the Pipelines section

- Enter test for Name and YML Pipeline Name

- Select Default for Hook Type and click Create

Now let’s add the remaining two pipelines for Push and Deploy:

Push Pipeline

- Click on the Workflows tab

- Click Add new pipeline in the Pipelines section

- Enter push for Name and YML Pipeline Name

- Select Default for Hook Type and click Create

Deploy Pipeline

- Click on the Workflows tab

- Click Add new pipeline in the Pipelines section

- Enter deploy for Name and YML Pipeline Name

- Select Default for Hook Type and click Create

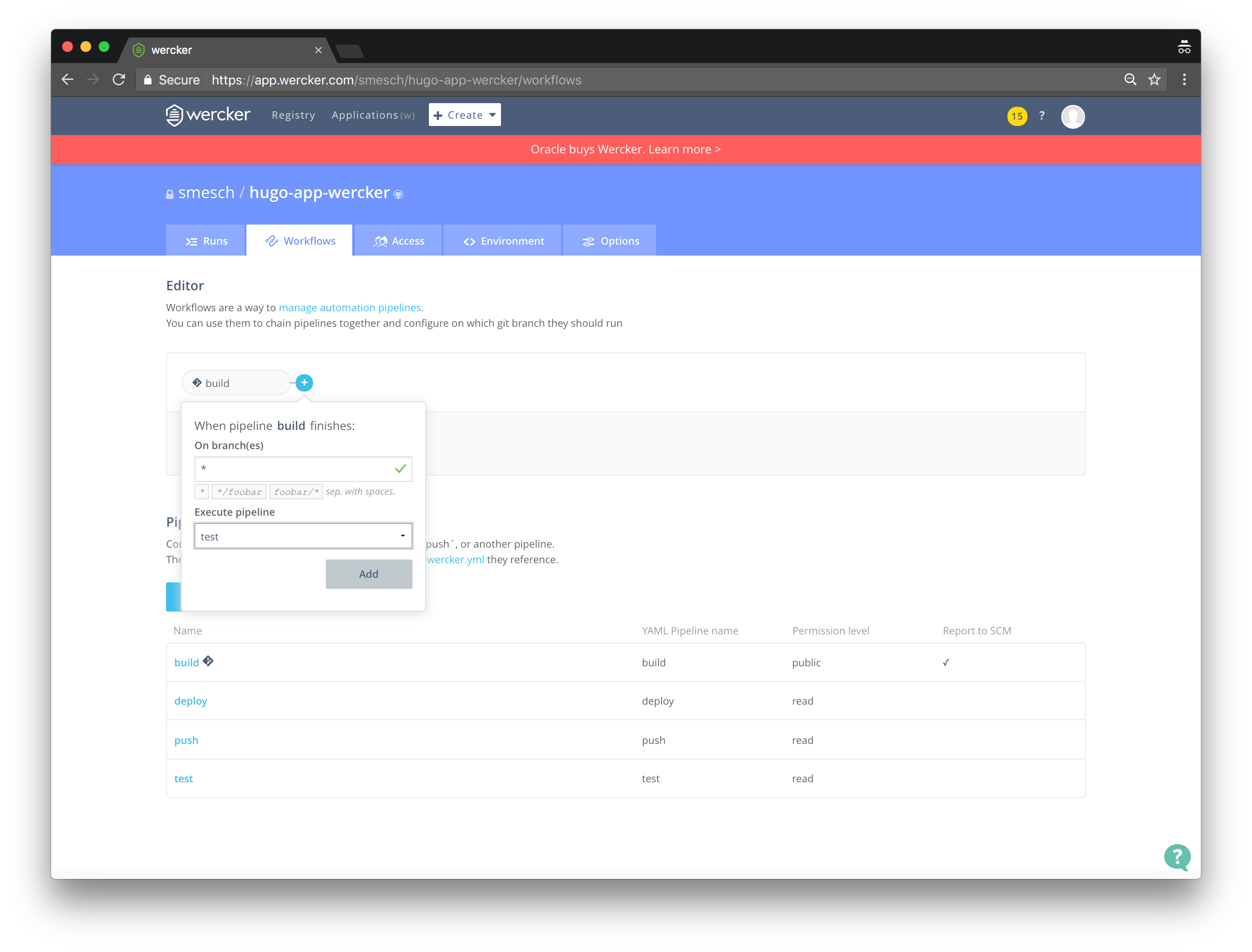

Now chain together the four pipelines to complete the workflow:

Workflow Editor

- Click on the Workflows tab

- Click the plus (+) symbol next to build in the Editor section

- Select test from the Execute pipeline dropdown menu and click Add

- Click the plus (+) symbol next to test in the Editor section

- Select push from the Execute pipeline dropdown menu and click Add

- Click the plus (+) symbol next to push in the Editor section

- Select deploy from the Execute pipeline dropdown menu and click Add

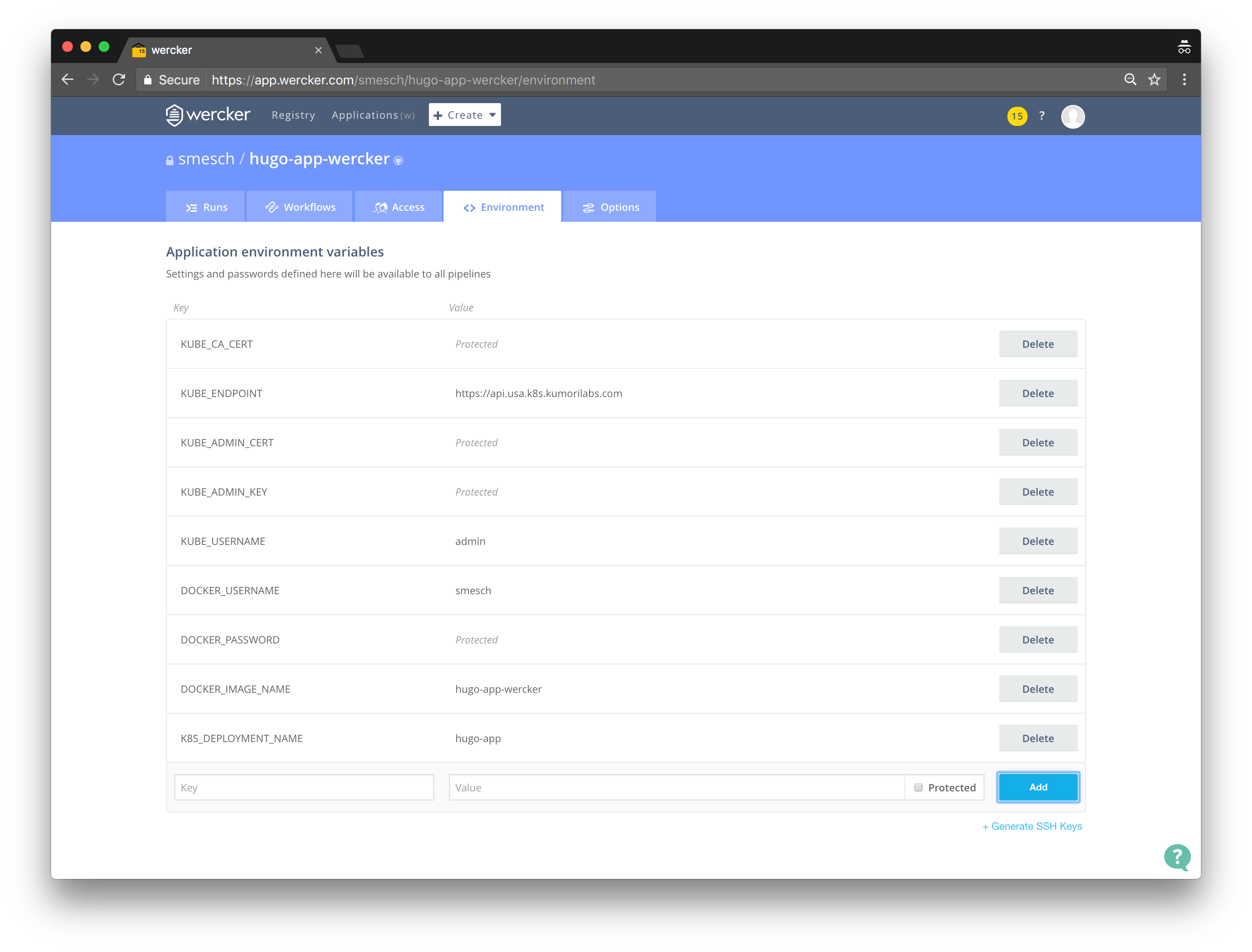

Configure the required environment variables

Before we populate the required environment variables for Docker Hub and your Kubernetes cluster, we need to retrieve some information from the kubeconfig file for your cluster. We will use kubectl to extract the required values from your kubeconfig and set them to local environment variables on your host:

export KUBE_CA_CERT=$(kubectl config view --flatten --output=json \

| jq --raw-output '.clusters[0] .cluster ["certificate-authority-data"]')

export KUBE_ENDPOINT=$(kubectl config view --flatten --output=json \

| jq --raw-output '.clusters[0] .cluster ["server"]')

export KUBE_ADMIN_CERT=$(kubectl config view --flatten --output=json \

| jq --raw-output '.users[0] .user ["client-certificate-data"]')

export KUBE_ADMIN_KEY=$(kubectl config view --flatten --output=json \

| jq --raw-output '.users[0] .user ["client-key-data"]')

export KUBE_USERNAME=$(kubectl config view --flatten --output=json \

| jq --raw-output '.users[0] .user ["username"]')

You can now echo out each of these local variables to retrieve the values and then copy/paste them into the Application environment variables section on the Environment tab:

echo "KUBE_CA_CERT = $KUBE_CA_CERT"

echo "KUBE_ENDPOINT = $KUBE_ENDPOINT"

echo "KUBE_ADMIN_CERT = $KUBE_ADMIN_CERT"

echo "KUBE_ADMIN_KEY = $KUBE_ADMIN_KEY"

echo "KUBE_USERNAME = $KUBE_USERNAME"
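Before pasting the values into Wercker, it may be worth confirming that none of them came back empty. jq prints the literal string null when a key does not exist, which can happen if the first user entry in your kubeconfig has no username field. The sketch below is one way to check; the helper name check_var is hypothetical.

```shell
# Hypothetical helper: flag a named variable that is unset, empty, or "null".
# jq --raw-output prints "null" when the requested key does not exist.
check_var() {
  eval "value=\${$1:-}"
  if [ -z "${value}" ] || [ "${value}" = "null" ]; then
    echo "$1: MISSING"
    return 1
  fi
  echo "$1: ok"
}

# Check the five variables extracted above and count the ones that need attention.
MISSING_COUNT=0
for name in KUBE_CA_CERT KUBE_ENDPOINT KUBE_ADMIN_CERT KUBE_ADMIN_KEY KUBE_USERNAME; do
  check_var "${name}" || MISSING_COUNT=$((MISSING_COUNT + 1))
done
echo "Variables needing attention: ${MISSING_COUNT}"
```

If a variable is reported as MISSING, inspect the output of kubectl config view --flatten to see which cluster and user entries come first, since the jq filters above always read index 0.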

In addition to the five environment variables for your Kubernetes cluster, you also need to add the following (click the Protected checkbox for any sensitive information):

- DOCKER_USERNAME

- DOCKER_PASSWORD

- DOCKER_IMAGE_NAME (hugo-app-wercker)

- K8S_DEPLOYMENT_NAME (hugo-app)

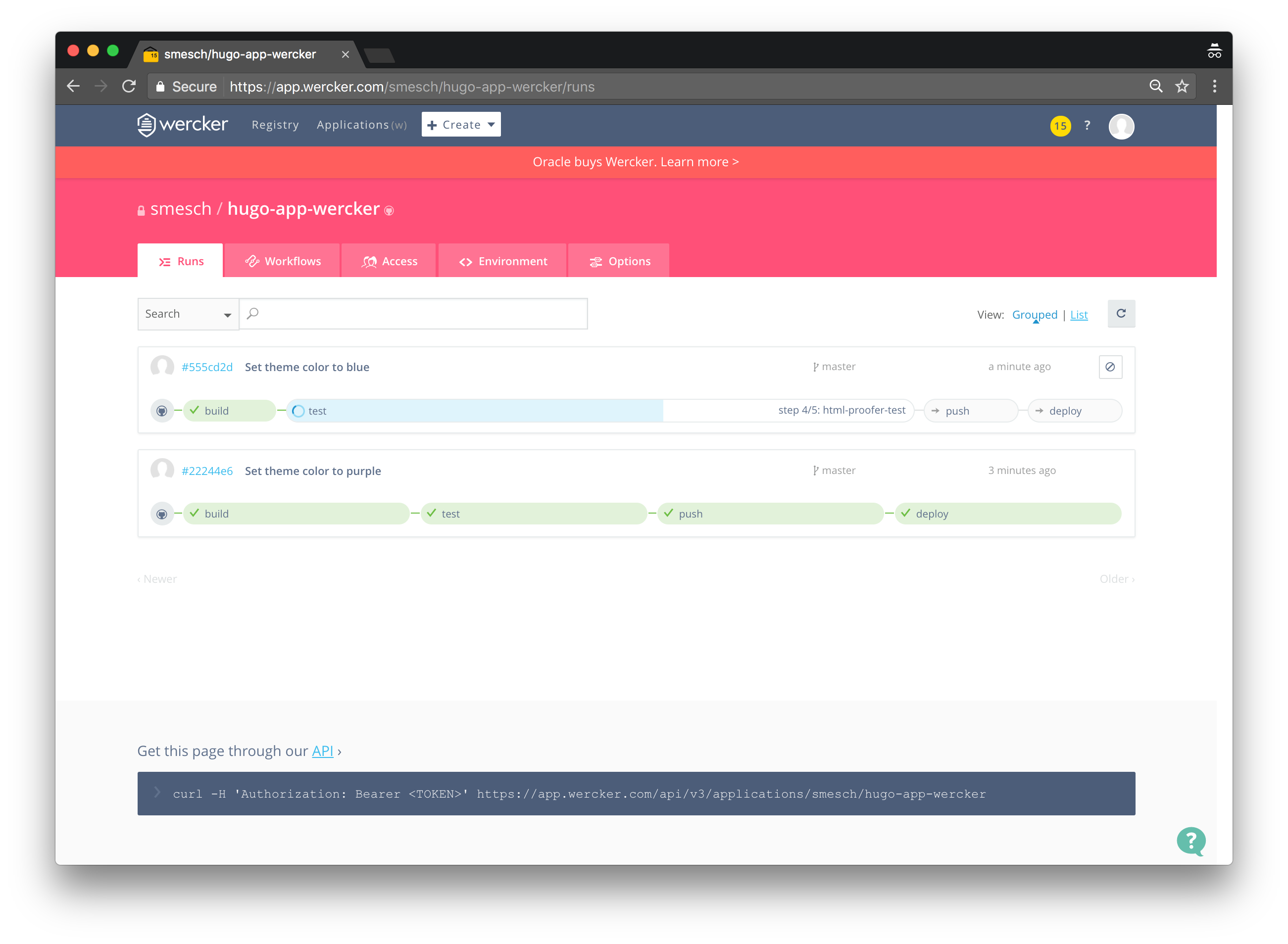

Test the Wercker pipeline

We will now change the theme color to purple in the Hugo config file and then commit the change to your GitHub repository (you will be prompted for your GitHub credentials):

export HUGO_APP_TAG="purple"

sed -i -e 's|primary = .*|primary = "'"${HUGO_APP_TAG}"'"|g' \

hugo-app-wercker/config.toml

git -C hugo-app-wercker/ pull

git -C hugo-app-wercker/ commit -a -m "Set theme color to ${HUGO_APP_TAG}"

git -C hugo-app-wercker/ push -u origin master

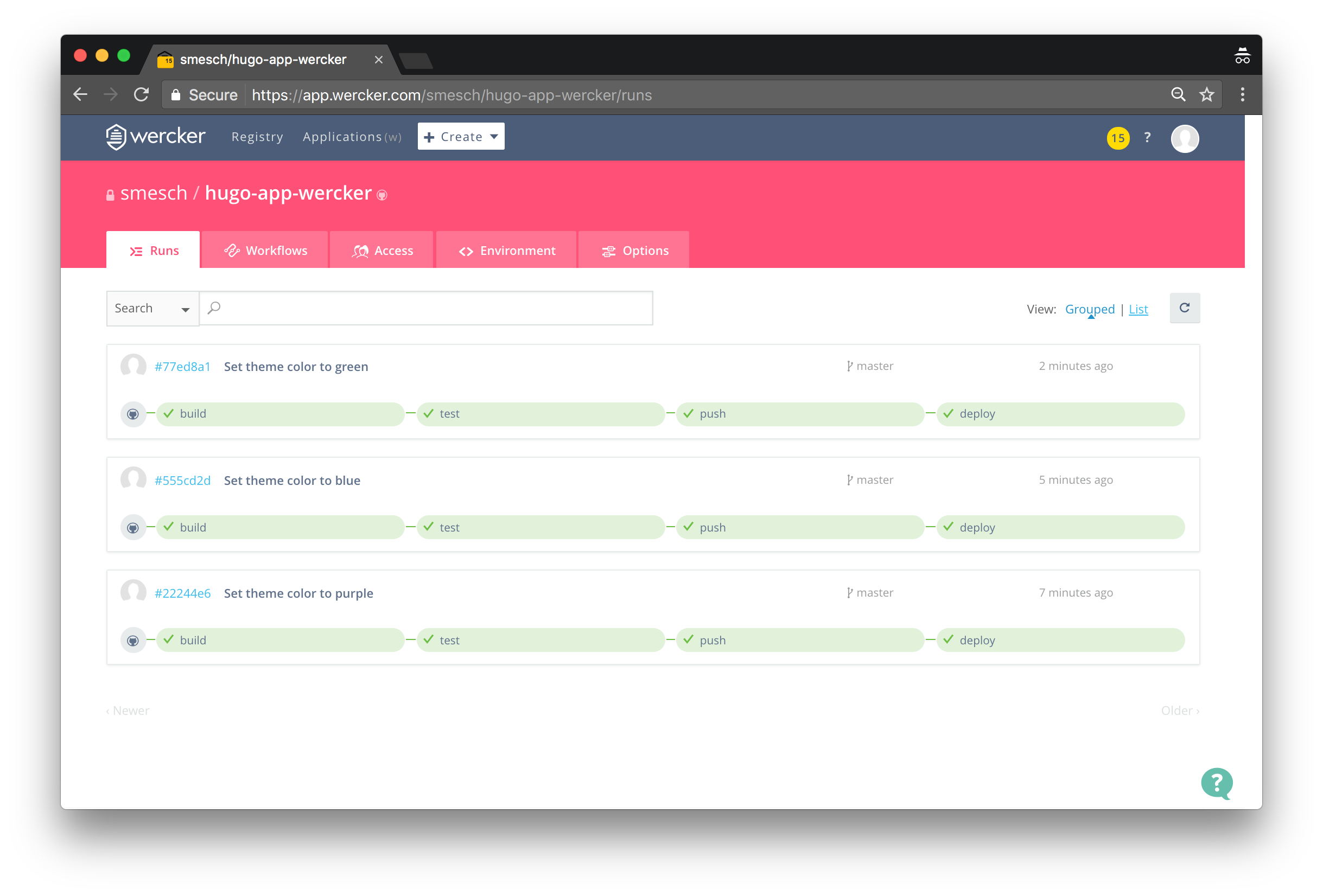

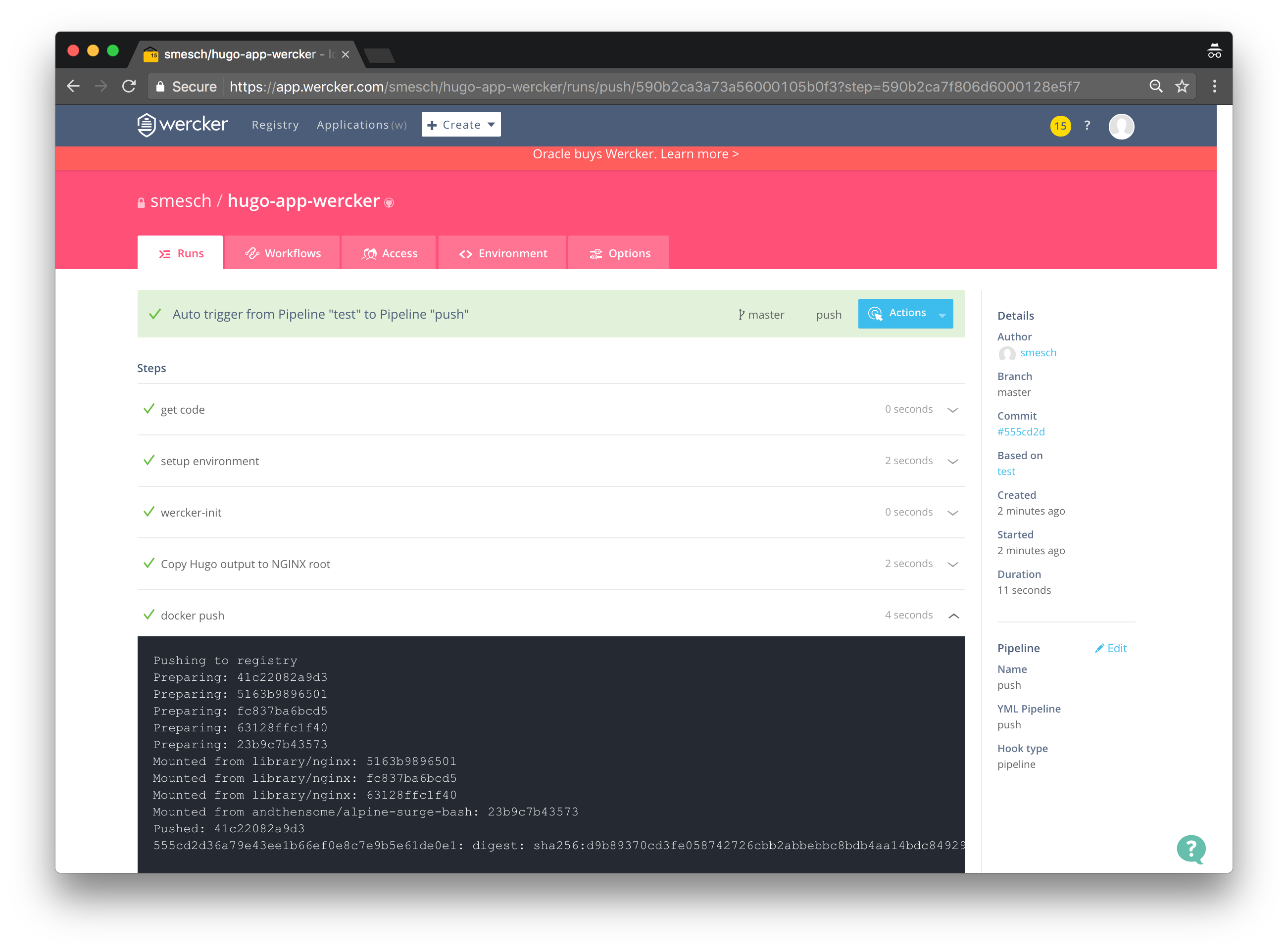

Within a few seconds of committing the change to GitHub, the Wercker pipeline should be started automatically. You can see the pipeline running in real-time by clicking on the Runs tab of the repository on the Wercker site:

And when it completes, the theme color of the site will be purple:

You now have a fully functional continuous deployment pipeline set up in Wercker.

Cleanup

Before proceeding to the next lab, delete the cluster and its associated S3 bucket:

Delete the cluster

Delete the cluster:

kops delete cluster ${CLUSTER_FULL_NAME} --yes

Delete the S3 bucket in AWS:

aws s3api delete-bucket --bucket ${CLUSTER_FULL_NAME}-state

In addition to the step-by-step instructions provided for each lab, the repository also contains scripts to automate some of the activities being performed in this blog series. See the Using Scripts guide for more details.

Next Up

In the next lab, Lab #10: Setup Kubernetes Federation Between Clusters in Different AWS Regions, we will go through the following:

- Deploying three clusters in different AWS regions

- Creating a federation with the three clusters

- Creating a federated Deployment

Other Labs in the Series

- Introduction: A Blog Series About All Things Kubernetes

- Lab #1: Deploy a Kubernetes Cluster in AWS with Kops

- Lab #2: Maintaining your Kubernetes Cluster

- Lab #3: Creating Deployments & Services in Kubernetes

- Lab #4: Kubernetes Deployment Strategies: Rolling Updates, Canary & Blue-Green

- Lab #5: Setup Horizontal Pod & Cluster Autoscaling in Kubernetes

- Lab #6: Integrating Jenkins and Kubernetes

- Lab #7: Continuous Deployment with Jenkins and Kubernetes

- Lab #8: Continuous Deployment with Travis CI and Kubernetes

- Lab #10: Setup Kubernetes Federation Between Clusters in Different AWS Regions