By Steven Eschinger | May 11, 2017

This post was updated on September 18th, 2017 for Kubernetes version 1.7.6 & Kops version 1.7.0

Introduction

Kubernetes Cluster Federation, first released in version 1.3 in July 2016, lets you federate multiple clusters and control them as a single entity through a Federation control plane. A Federation can include clusters in different regions of the same cloud provider, clusters spread across multiple cloud providers, and even on-premises clusters.

Some of the use cases for Federation are:

- Geographically Distributed Deployments: Spread Deployments across clusters in different parts of the world

- Hybrid Cloud: Extend Deployments from on-premises clusters to the cloud

- Higher Availability: Ability to federate clusters across different regions/cloud providers

- Application Migration: Simplify the migration of applications from on-premises to the cloud or between cloud providers

In this lab, we will deploy clusters in three different AWS regions:

- USA: N. Virginia (us-east-1)

- Europe: Ireland (eu-west-1)

- Japan: Tokyo (ap-northeast-1)

We will deploy the Federation control plane to the USA cluster (host cluster) and then add all three clusters to the Federation. We will then create a federated Deployment for the same Hugo site we have used in previous labs. By default, the Pods for the federated Deployment will be spread out evenly across the three clusters.

Finally, we will create latency-based DNS records in Route 53, one for each cluster region. The result is a globally distributed Deployment where end users are automatically routed to the cluster with the lowest network latency, which is usually the one closest to them.

Activities

- Deploy the USA cluster

- Deploy the Europe cluster

- Deploy the Japan cluster

- Check the status of the clusters

- Create the Federation

- Create the federated Deployment for the Hugo site

- Create the latency-based DNS records

- Delete the clusters

Warning: Some of the AWS resources that will be created in the following lab are not eligible for the AWS Free Tier and therefore will cost you money. For example, running a three-node cluster with the suggested instance size of t2.medium will cost you around $0.20 per hour based on current pricing.

Prerequisites

Review the Getting Started section in the introductory post of this blog series

Log into the Vagrant box or your prepared local host environment

Update and then load the required environment variables:

# Must change: Your domain name that is hosted in AWS Route 53

export DOMAIN_NAME="k8s.kumorilabs.com"

# Leave as-is: AWS Route 53 hosted zone ID for your domain

export DOMAIN_NAME_ZONE_ID=$(aws route53 list-hosted-zones \
  | jq -r '.HostedZones[] | select(.Name=="'${DOMAIN_NAME}'.") | .Id' \
  | sed 's/\/hostedzone\///')
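Route 53 stores zone names fully qualified with a trailing dot, which is why the jq filter above compares against `${DOMAIN_NAME}.`. If the domain is misspelled or not hosted in Route 53, `DOMAIN_NAME_ZONE_ID` silently ends up empty, and if the account has both a public and a private zone with that name it ends up holding two IDs on separate lines. Either way the later Route 53 calls fail. A minimal guard might look like this; `require_single_value` is a hypothetical helper, not part of the lab's repository:

```shell
# Hypothetical helper (not part of the lab's repository): fail if a looked-up
# value is empty or spans more than one line.
# Usage: require_single_value LABEL "$VALUE"
require_single_value() {
  local label="$1" value="$2"
  local newline='
'
  if [ -z "$value" ]; then
    echo "error: ${label} is empty" >&2
    return 1
  fi
  case "$value" in
    *"$newline"*)
      # Several matches, e.g. a public and a private hosted zone with the same name
      echo "error: ${label} matched more than one value:" >&2
      printf '%s\n' "$value" >&2
      return 1
      ;;
  esac
  return 0
}
```

For example: `require_single_value DOMAIN_NAME_ZONE_ID "${DOMAIN_NAME_ZONE_ID}" && echo "Using hosted zone ${DOMAIN_NAME_ZONE_ID}"`.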

Implementation

Deploy the USA cluster

Set the environment variables for the USA cluster:

# Friendly name to use as an alias for the USA cluster

export USA_CLUSTER_ALIAS="usa"

# Leave as-is: Full DNS name of the USA cluster

export USA_CLUSTER_FULL_NAME="${USA_CLUSTER_ALIAS}.${DOMAIN_NAME}"

# AWS region where the USA cluster will be created

export USA_CLUSTER_AWS_REGION="us-east-1"

# AWS availability zone where the USA cluster will be created

export USA_CLUSTER_AWS_AZ="us-east-1a"

Create the first S3 bucket in AWS, which will be used by Kops for cluster configuration storage for the USA cluster:

aws s3api create-bucket --bucket ${USA_CLUSTER_FULL_NAME}-state

Create the USA cluster with Kops, specifying the URL of the Kops state store. To keep costs down, --node-count has been reduced to 1:

kops create cluster \
  --name=${USA_CLUSTER_FULL_NAME} \
  --zones=${USA_CLUSTER_AWS_AZ} \
  --master-size="t2.medium" \
  --node-size="t2.medium" \
  --node-count="1" \
  --dns-zone=${DOMAIN_NAME} \
  --ssh-public-key="~/.ssh/id_rsa.pub" \
  --state="s3://${USA_CLUSTER_FULL_NAME}-state" \
  --kubernetes-version="1.7.6" --yes

Create a context alias named usa for the cluster in your kubeconfig file:

kubectl config set-context ${USA_CLUSTER_ALIAS} \
  --cluster=${USA_CLUSTER_FULL_NAME} --user=${USA_CLUSTER_FULL_NAME}

Deploy the Europe cluster

Set the environment variables for the Europe cluster:

# Friendly name to use as an alias for the Europe cluster

export EUR_CLUSTER_ALIAS="eur"

# Leave as-is: Full DNS name of the Europe cluster

export EUR_CLUSTER_FULL_NAME="${EUR_CLUSTER_ALIAS}.${DOMAIN_NAME}"

# AWS region where the Europe cluster will be created

export EUR_CLUSTER_AWS_REGION="eu-west-1"

# AWS availability zone where the Europe cluster will be created

export EUR_CLUSTER_AWS_AZ="eu-west-1a"

Create the second S3 bucket in AWS, which will be used by Kops for cluster configuration storage for the Europe cluster:

aws s3api create-bucket --bucket ${EUR_CLUSTER_FULL_NAME}-state
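The `create-bucket` call above sends the request to the AWS CLI's default region and passes no location, so it works as written when that default is `us-east-1` (where the bucket then lives). For other regions, the S3 API expects a `LocationConstraint` and typically rejects the request without one (`IllegalLocationConstraintException`), while `us-east-1` must not be given as a constraint. If you would rather keep each state bucket next to its cluster, a sketch assuming the region variables defined in this lab; `create_state_bucket` is a hypothetical helper:

```shell
# Hypothetical helper: create a Kops state bucket in a specific AWS region.
# us-east-1 is the default S3 location and is passed without a LocationConstraint;
# every other region is passed as an explicit LocationConstraint.
create_state_bucket() {
  local bucket="$1" region="$2"
  if [ "$region" = "us-east-1" ]; then
    aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=${region}"
  fi
}
```

For example, `create_state_bucket "${EUR_CLUSTER_FULL_NAME}-state" "${EUR_CLUSTER_AWS_REGION}"` would replace the command above for the Europe cluster. This is optional; the lab's single-region approach also works as a state store.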

Create the Europe cluster with Kops, specifying the URL of the Kops state store:

kops create cluster \
  --name=${EUR_CLUSTER_FULL_NAME} \
  --zones=${EUR_CLUSTER_AWS_AZ} \
  --master-size="t2.medium" \
  --node-size="t2.medium" \
  --node-count="1" \
  --dns-zone=${DOMAIN_NAME} \
  --ssh-public-key="~/.ssh/id_rsa.pub" \
  --state="s3://${EUR_CLUSTER_FULL_NAME}-state" \
  --kubernetes-version="1.7.6" --yes

Create a context alias named eur for the cluster in your kubeconfig file:

kubectl config set-context ${EUR_CLUSTER_ALIAS} \
  --cluster=${EUR_CLUSTER_FULL_NAME} --user=${EUR_CLUSTER_FULL_NAME}

Deploy the Japan cluster

Set the environment variables for the Japan cluster:

# Friendly name to use as an alias for the Japan cluster

export JPN_CLUSTER_ALIAS="jpn"

# Leave as-is: Full DNS name of the Japan cluster

export JPN_CLUSTER_FULL_NAME="${JPN_CLUSTER_ALIAS}.${DOMAIN_NAME}"

# AWS region where the Japan cluster will be created

export JPN_CLUSTER_AWS_REGION="ap-northeast-1"

# AWS availability zone where the Japan cluster will be created

export JPN_CLUSTER_AWS_AZ="ap-northeast-1a"

Create the third S3 bucket in AWS, which will be used by Kops for cluster configuration storage for the Japan cluster:

aws s3api create-bucket --bucket ${JPN_CLUSTER_FULL_NAME}-state

Create the Japan cluster with Kops, specifying the URL of the Kops state store:

kops create cluster \
  --name=${JPN_CLUSTER_FULL_NAME} \
  --zones=${JPN_CLUSTER_AWS_AZ} \
  --master-size="t2.medium" \
  --node-size="t2.medium" \
  --node-count="1" \
  --dns-zone=${DOMAIN_NAME} \
  --ssh-public-key="~/.ssh/id_rsa.pub" \
  --state="s3://${JPN_CLUSTER_FULL_NAME}-state" \
  --kubernetes-version="1.7.6" --yes

Create a context alias named jpn for the cluster in your kubeconfig file:

kubectl config set-context ${JPN_CLUSTER_ALIAS} \
  --cluster=${JPN_CLUSTER_FULL_NAME} --user=${JPN_CLUSTER_FULL_NAME}
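The three deployment sections differ only in the cluster alias and availability zone; the full name and state store URL are derived from the alias and `DOMAIN_NAME`. A hypothetical helper that prints (but does not run) the `kops create cluster` command for any alias/AZ pair makes that naming scheme explicit. It is a sketch that hard-codes the sizes and versions used in this lab:

```shell
# Hypothetical helper: print (without running) the kops command used for each
# cluster above. Assumes DOMAIN_NAME is already set; all other values are the
# ones used in this lab.
print_kops_create() {
  local cluster_alias="$1" az="$2"
  local full_name="${cluster_alias}.${DOMAIN_NAME}"
  printf '%s' "kops create cluster --name=${full_name} --zones=${az}"
  printf '%s' " --master-size=t2.medium --node-size=t2.medium --node-count=1"
  printf '%s' " --dns-zone=${DOMAIN_NAME} --ssh-public-key=~/.ssh/id_rsa.pub"
  printf '%s\n' " --state=s3://${full_name}-state --kubernetes-version=1.7.6 --yes"
}
```

For example, `for spec in usa:us-east-1a eur:eu-west-1a jpn:ap-northeast-1a; do print_kops_create "${spec%%:*}" "${spec#*:}"; done` prints all three commands for review.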

Check the status of the clusters

Before creating the Federation, check to make sure that all three clusters are ready. As there are multiple clusters defined in your kubeconfig file, you need to specify which context to use when executing kubectl commands:

kubectl --context=usa get nodes

NAME STATUS AGE VERSION

ip-172-20-32-197.ec2.internal Ready 5m v1.7.6

ip-172-20-41-122.ec2.internal Ready 3m v1.7.6

kubectl --context=eur get nodes

NAME STATUS AGE VERSION

ip-172-20-52-25.eu-west-1.compute.internal Ready 2m v1.7.6

ip-172-20-62-54.eu-west-1.compute.internal Ready 3m v1.7.6

kubectl --context=jpn get nodes

NAME STATUS AGE VERSION

ip-172-20-35-253.ap-northeast-1.compute.internal Ready 3m v1.7.6

ip-172-20-49-184.ap-northeast-1.compute.internal Ready 2m v1.7.6
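Instead of re-running `get nodes` by hand until every node shows `Ready`, the check can be scripted. A minimal sketch, assuming the `usa`, `eur` and `jpn` contexts created above; `all_nodes_ready` and `wait_for_nodes` are hypothetical helpers that read the STATUS column (the second column, as in the output above) of `kubectl get nodes --no-headers`:

```shell
# Hypothetical helper: succeed only if there is at least one node and every
# line on stdin has STATUS (column 2) exactly equal to "Ready".
all_nodes_ready() {
  awk '
    { total++; if ($2 == "Ready") ready++ }
    END { exit (total > 0 && ready == total) ? 0 : 1 }
  '
}

# Hypothetical wrapper: poll one kubeconfig context until all of its nodes are Ready.
wait_for_nodes() {
  local ctx="$1"
  until kubectl --context="$ctx" get nodes --no-headers | all_nodes_ready; do
    echo "Waiting for nodes in context ${ctx}..."
    sleep 15
  done
}
```

For example: `for ctx in usa eur jpn; do wait_for_nodes "$ctx"; done`. Note that a status such as `Ready,SchedulingDisabled` is treated as not ready by this check.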

Create the Federation

Now that all three clusters are ready, we can create the Federation. The USA cluster will be the host cluster, hosting the components that make up the Federation control plane.

Set the name of the Federation:

export FEDERATION_NAME="fed"

Change to the usa cluster context:

kubectl config use-context ${USA_CLUSTER_ALIAS}

Switched to context "usa".

Deploy the Federation control plane to the host cluster (it will take a couple of minutes to initialize):

kubefed init ${FEDERATION_NAME} --host-cluster-context=${USA_CLUSTER_ALIAS} \
  --dns-provider=aws-route53 --dns-zone-name=${DOMAIN_NAME}

Creating a namespace federation-system for federation system components... done

Creating federation control plane service..... done

Creating federation control plane objects (credentials, persistent volume claim)... done

Creating federation component deployments... done

Updating kubeconfig... done

Waiting for federation control plane to come up................................................................................ done

Federation API server is running at: acf80b28aa2f211e7b4b20a27bea4db1-1048928722.us-east-1.elb.amazonaws.com

Switch to the fed context, which points at the Federation API server:

kubectl config use-context ${FEDERATION_NAME}

Switched to context "fed".

Join the USA cluster to the Federation:

kubefed join ${USA_CLUSTER_ALIAS} --host-cluster-context=${USA_CLUSTER_ALIAS} \
  --cluster-context=${USA_CLUSTER_ALIAS}

cluster "usa" created

Join the Europe & Japan clusters to the Federation:

kubefed join ${EUR_CLUSTER_ALIAS} --host-cluster-context=${USA_CLUSTER_ALIAS} \
  --cluster-context=${EUR_CLUSTER_ALIAS}

cluster "eur" created

kubefed join ${JPN_CLUSTER_ALIAS} --host-cluster-context=${USA_CLUSTER_ALIAS} \
  --cluster-context=${JPN_CLUSTER_ALIAS}

cluster "jpn" created
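The three `kubefed join` commands differ only in the cluster being joined; the host cluster context is always the USA cluster. They can be wrapped in a loop. A sketch, run from the fed context as above; `join_clusters` is a hypothetical helper and stops at the first failed join:

```shell
# Hypothetical helper: join each given cluster context to the Federation,
# using the same host cluster context for every join.
# Usage: join_clusters HOST_CONTEXT CLUSTER_CONTEXT...
join_clusters() {
  local host_ctx="$1" ctx
  shift
  for ctx in "$@"; do
    kubefed join "$ctx" --host-cluster-context="$host_ctx" \
      --cluster-context="$ctx" || return 1
  done
}
```

For example: `join_clusters "${USA_CLUSTER_ALIAS}" "${USA_CLUSTER_ALIAS}" "${EUR_CLUSTER_ALIAS}" "${JPN_CLUSTER_ALIAS}"`. Each cluster is joined under the name of its context alias, which matches the commands above.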

Check the status of the clusters in the Federation:

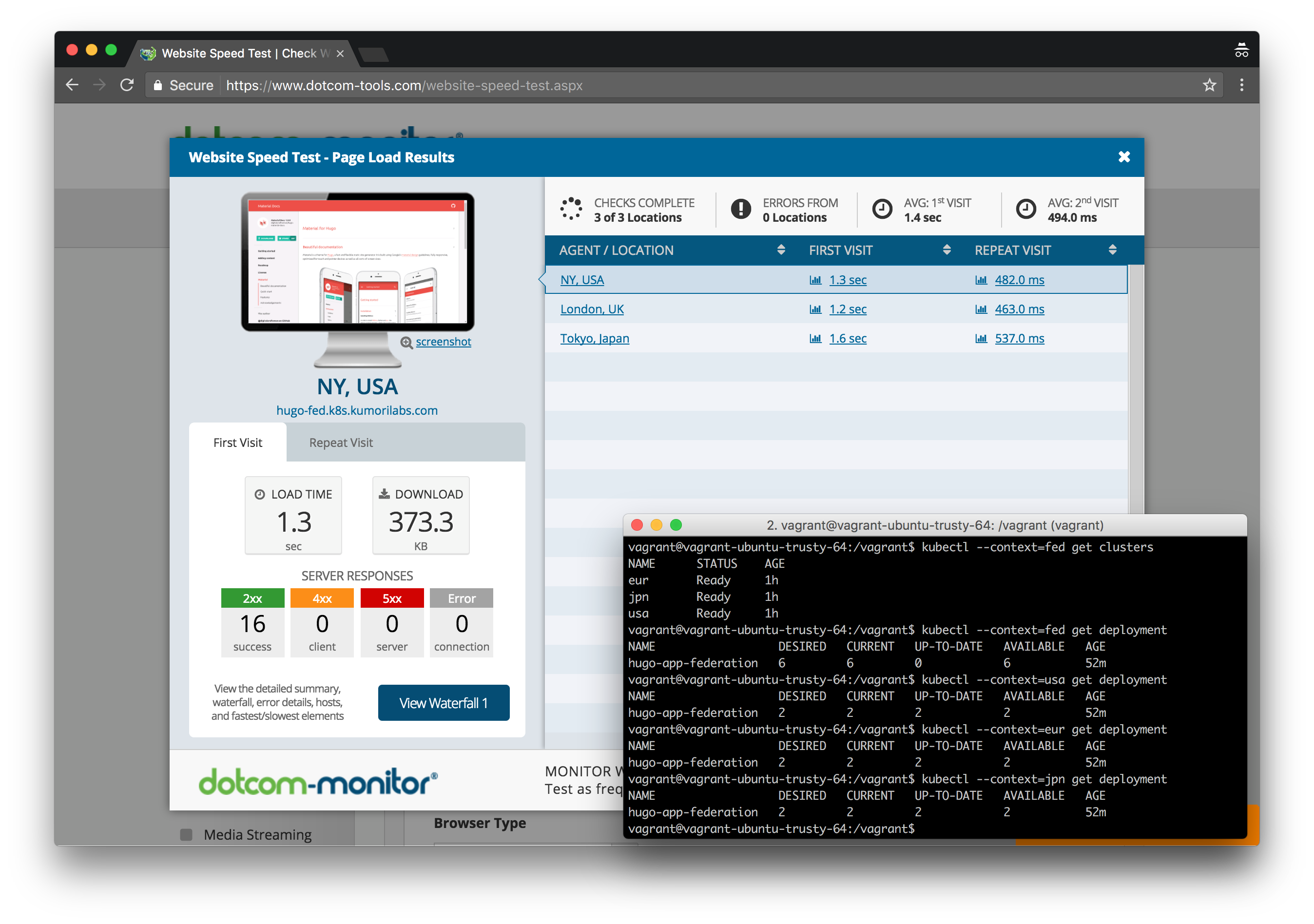

kubectl --context=fed get clusters

NAME STATUS AGE

eur Ready 1m

jpn Ready 1m

usa Ready 3m

Create the default federated namespace:

kubectl --context=fed create namespace default

namespace "default" created

Create the federated Deployment for the Hugo site

Create the federated Deployment for the Hugo site, specifying fed as the context:

kubectl --context=fed create -f ./kubernetes/hugo-app-federation

deployment "hugo-app-federation" created

service "hugo-app-federation-svc" created

The federated Deployment specifies six replicas, which are spread evenly across the three clusters.
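With six replicas and three clusters, an even spread works out to 6 / 3 = 2 Pods per cluster, which is what the per-cluster output below shows. When the count does not divide evenly, one simple way to place the remainder is to give one extra replica to some of the clusters. The sketch below illustrates that arithmetic only; it is not the Federation controller's scheduling code, and the controller's actual placement may differ. `even_split` is a hypothetical helper:

```shell
# Illustration only: divide N replicas as evenly as possible across K clusters.
# The first (N mod K) clusters get one extra replica.
even_split() {
  local replicas="$1" clusters="$2"
  local base=$((replicas / clusters)) extra=$((replicas % clusters))
  local i=0 n out=""
  while [ "$i" -lt "$clusters" ]; do
    if [ "$i" -lt "$extra" ]; then
      n=$((base + 1))
    else
      n=$base
    fi
    out="${out}${out:+ }${n}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

For example, `even_split 6 3` prints `2 2 2`, and `even_split 7 3` prints `3 2 2`.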

Federated Deployment

kubectl --context=fed get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hugo-app-federation 6 6 0 6 48s

USA Cluster

kubectl --context=usa get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hugo-app-federation 2 2 2 2 46s

Europe Cluster

kubectl --context=eur get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hugo-app-federation 2 2 2 2 54s

Japan Cluster

kubectl --context=jpn get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

hugo-app-federation 2 2 2 2 1m

The federated Service, in turn, creates a LoadBalancer Service in each of the three clusters:

Federated Service

kubectl --context=fed get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hugo-app-federation-svc a9d02aafa3504... 80/TCP 11s

kubectl --context=fed describe svc hugo-app-federation-svc

Name: hugo-app-federation-svc

Namespace: default

Labels: app=hugo-app-federation

Annotations: <none>

Selector: app=hugo-app-federation

Type: LoadBalancer

IP:

LoadBalancer Ingress: a9d02aafa350411e7999c0ad4271fa7c-336709007.us-east-1.elb.amazonaws.com, a9d14dd19350411e79b7e0606cff887c-814146953.eu-west-1.elb.amazonaws.com, a9d2ce30d350411e7996f0632c187830-238454266.ap-northeast-1.elb.amazonaws.com

Port: http 80/TCP

Endpoints: <none>

Session Affinity: None

Events: <none>
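The `LoadBalancer Ingress` field of the federated Service lists the hostnames of the three per-cluster ELBs. Classic ELB hostnames like these embed the AWS region as the second dot-separated label (`<name>.<region>.elb.amazonaws.com`), so splitting the list is a quick way to confirm there is one load balancer per region. A sketch; `elb_regions` is a hypothetical helper that accepts hostnames separated by commas and/or spaces:

```shell
# Hypothetical helper: print "region hostname" for each ELB hostname in a
# comma- and/or space-separated list (e.g. the LoadBalancer Ingress value).
elb_regions() {
  printf '%s\n' "$1" | tr ', ' '\n\n' | while read -r host; do
    [ -n "$host" ] || continue
    # Second dot-separated label, e.g. "us-east-1" in <name>.us-east-1.elb.amazonaws.com
    region=$(printf '%s\n' "$host" | cut -d. -f2)
    printf '%s %s\n' "$region" "$host"
  done
}
```

Passing the `LoadBalancer Ingress` value shown above should print one line each for `us-east-1`, `eu-west-1` and `ap-northeast-1`.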

USA Cluster

kubectl --context=usa get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hugo-app-federation-svc 100.64.77.13 a9d02aafa3504... 80:32256/TCP 47s

kubernetes 100.64.0.1 <none> 443/TCP 27m

Europe Cluster

kubectl --context=eur get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hugo-app-federation-svc 100.68.170.252 a9d14dd193504... 80:30718/TCP 1m

kubernetes 100.64.0.1 <none> 443/TCP 26m

Japan Cluster

kubectl --context=jpn get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hugo-app-federation-svc 100.69.172.42 a9d2ce30d3504... 80:32375/TCP 2m

kubernetes 100.64.0.1 <none> 443/TCP 24m

Create the latency-based DNS records

We will now create latency-based DNS CNAME records for the three LoadBalancer Services in your Route 53 domain with a prefix of hugo-fed (e.g., hugo-fed.k8s.kumorilabs.com), using the dns-record-federation.json template file in the repository:

USA Cluster

# Set the DNS prefix and Service name, then retrieve the ELB hostname for the USA cluster

export DNS_RECORD_PREFIX="hugo-fed"

export SERVICE_NAME="hugo-app-federation-svc"

# No spaces around {{.hostname}}, so no stray whitespace ends up in the DNS record
export USA_HUGO_APP_ELB=$(kubectl --context=${USA_CLUSTER_ALIAS} get svc/${SERVICE_NAME} \
  --template="{{range .status.loadBalancer.ingress}}{{.hostname}}{{end}}")

# Add to JSON file

sed -i -e 's|"Name": ".*|"Name": "'"${DNS_RECORD_PREFIX}.${DOMAIN_NAME}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Region": ".*|"Region": "'"${USA_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"SetIdentifier": ".*|"SetIdentifier": "'"${USA_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Value": ".*|"Value": "'"${USA_HUGO_APP_ELB}"'"|g' \
  scripts/apps/dns-records/dns-record-federation.json

# Create DNS record

aws route53 change-resource-record-sets --hosted-zone-id ${DOMAIN_NAME_ZONE_ID} \
  --change-batch file://scripts/apps/dns-records/dns-record-federation.json
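The four `sed` edits above rewrite `dns-record-federation.json` in place, so the file always holds the values from the most recent run. As an alternative that leaves the repository template untouched, the change batch can be generated directly. This is a sketch only: the layout follows the Route 53 `ChangeResourceRecordSets` format for a latency-based CNAME (a `SetIdentifier` and `Region` per record), but the `UPSERT` action and 60-second TTL are assumptions and may not match the repository's template. `latency_cname_batch` is a hypothetical helper that also strips any spaces captured around the ELB hostname:

```shell
# Hypothetical helper: print a Route 53 change batch for one latency-based CNAME.
# Arguments: record name, AWS region (also used as the SetIdentifier), ELB hostname.
# UPSERT and TTL 60 are assumptions, not taken from the lab's template.
latency_cname_batch() {
  local name="$1" region="$2" value
  value=$(printf '%s' "$3" | tr -d ' ')
  cat <<EOF
{
  "Comment": "Latency-based record for ${region}",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "CNAME",
        "SetIdentifier": "${region}",
        "Region": "${region}",
        "TTL": 60,
        "ResourceRecords": [ { "Value": "${value}" } ]
      }
    }
  ]
}
EOF
}
```

For example, `latency_cname_batch "${DNS_RECORD_PREFIX}.${DOMAIN_NAME}" "${USA_CLUSTER_AWS_REGION}" "${USA_HUGO_APP_ELB}" > /tmp/usa-record.json` followed by `aws route53 change-resource-record-sets --hosted-zone-id ${DOMAIN_NAME_ZONE_ID} --change-batch file:///tmp/usa-record.json` would create the USA record; the Europe and Japan records follow the same pattern.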

Europe Cluster

# Retrieve the ELB hostname for the Europe cluster

export EUR_HUGO_APP_ELB=$(kubectl --context=${EUR_CLUSTER_ALIAS} get svc/${SERVICE_NAME} \
  --template="{{range .status.loadBalancer.ingress}}{{.hostname}}{{end}}")

# Add to JSON file

sed -i -e 's|"Name": ".*|"Name": "'"${DNS_RECORD_PREFIX}.${DOMAIN_NAME}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Region": ".*|"Region": "'"${EUR_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"SetIdentifier": ".*|"SetIdentifier": "'"${EUR_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Value": ".*|"Value": "'"${EUR_HUGO_APP_ELB}"'"|g' \
  scripts/apps/dns-records/dns-record-federation.json

# Create DNS record

aws route53 change-resource-record-sets --hosted-zone-id ${DOMAIN_NAME_ZONE_ID} \
  --change-batch file://scripts/apps/dns-records/dns-record-federation.json

Japan Cluster

# Retrieve the ELB hostname for the Japan cluster

export JPN_HUGO_APP_ELB=$(kubectl --context=${JPN_CLUSTER_ALIAS} get svc/${SERVICE_NAME} \
  --template="{{range .status.loadBalancer.ingress}}{{.hostname}}{{end}}")

# Add to JSON file

sed -i -e 's|"Name": ".*|"Name": "'"${DNS_RECORD_PREFIX}.${DOMAIN_NAME}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Region": ".*|"Region": "'"${JPN_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"SetIdentifier": ".*|"SetIdentifier": "'"${JPN_CLUSTER_AWS_REGION}"'",|g' \
  scripts/apps/dns-records/dns-record-federation.json

sed -i -e 's|"Value": ".*|"Value": "'"${JPN_HUGO_APP_ELB}"'"|g' \
  scripts/apps/dns-records/dns-record-federation.json

# Create DNS record

aws route53 change-resource-record-sets --hosted-zone-id ${DOMAIN_NAME_ZONE_ID} \
  --change-batch file://scripts/apps/dns-records/dns-record-federation.json

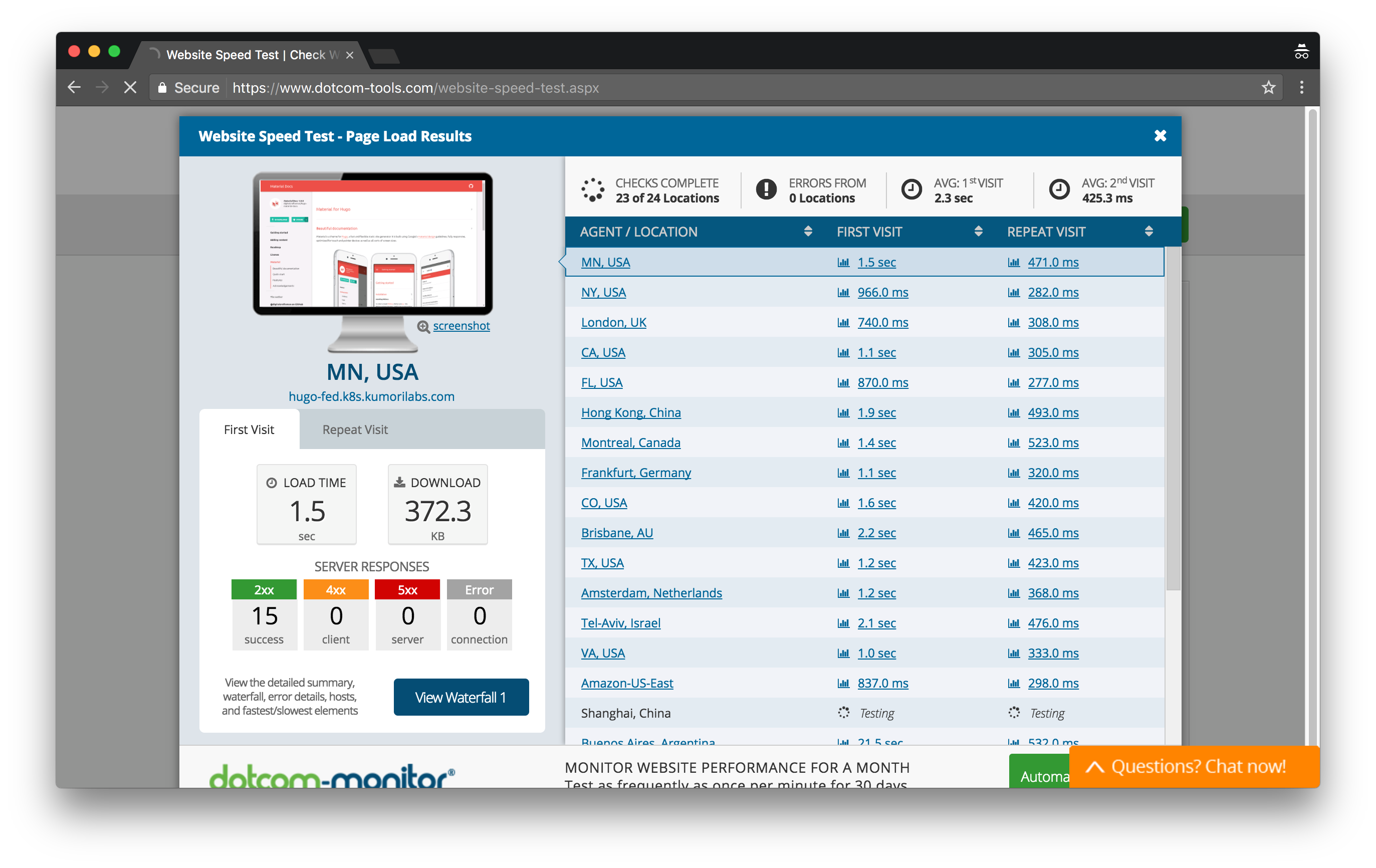

Traffic to the DNS name you just created (e.g., hugo-fed.k8s.kumorilabs.com) will be routed to the cluster with the lowest latency for each client, which is typically the nearest one.

To verify this, you can use the tool from Dotcom-Monitor to test latency to your site from different parts of the world, and the tool from WebSitePulse to confirm that the DNS name resolves to different IP addresses when queried from different regions.
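Conceptually, latency-based routing answers each DNS query with the record whose region has the lowest latency to the requester. Route 53 relies on its own latency measurements between client networks and AWS regions, so the following is only a toy model of the selection step: given `region milliseconds` pairs (made-up inputs, not real measurements), it prints the region that would win. `lowest_latency_region` is a hypothetical helper:

```shell
# Toy model only: read "region latency_ms" lines on stdin and print the region
# with the lowest latency. Values are compared numerically; the first one wins a tie.
lowest_latency_region() {
  awk '
    NR == 1 || $2 + 0 < best { best = $2 + 0; region = $1 }
    END { if (NR > 0) print region }
  '
}
```

For example, `printf '%s\n' "us-east-1 180" "eu-west-1 25" "ap-northeast-1 240" | lowest_latency_region` prints `eu-west-1`, mirroring a client in Europe being sent to the Ireland cluster's ELB.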

Cleanup

Before proceeding to the next lab, delete the three clusters and their associated S3 buckets:

Delete the clusters

Delete the USA cluster and its associated S3 bucket:

kops delete cluster ${USA_CLUSTER_FULL_NAME} --state="s3://${USA_CLUSTER_FULL_NAME}-state" --yes

aws s3api delete-bucket --bucket ${USA_CLUSTER_FULL_NAME}-state

Delete the Europe cluster and its associated S3 bucket:

kops delete cluster ${EUR_CLUSTER_FULL_NAME} --state="s3://${EUR_CLUSTER_FULL_NAME}-state" --yes

aws s3api delete-bucket --bucket ${EUR_CLUSTER_FULL_NAME}-state

Delete the Japan cluster and its associated S3 bucket:

kops delete cluster ${JPN_CLUSTER_FULL_NAME} --state="s3://${JPN_CLUSTER_FULL_NAME}-state" --yes

aws s3api delete-bucket --bucket ${JPN_CLUSTER_FULL_NAME}-state
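The steps above remove the clusters and buckets but not the three hugo-fed latency records, which keep pointing at ELBs that no longer exist. Route 53 deletes a record set only when the request matches it exactly, so one approach is to fetch the current record sets and send them back with a `DELETE` action. A sketch, assuming `jq` (already used in the prerequisites) and the variables set earlier; `delete_batch_for` is a hypothetical helper:

```shell
# Hypothetical helper: turn `aws route53 list-resource-record-sets` JSON (stdin)
# into a change batch that deletes every record set with the given name.
# Route 53 returns fully qualified names, so pass the name with a trailing dot.
delete_batch_for() {
  jq --arg name "$1" \
    '{Changes: [.ResourceRecordSets[] | select(.Name == $name) | {Action: "DELETE", ResourceRecordSet: .}]}'
}

# Example (not run here). Check the file first: an empty Changes list means
# nothing matched, and Route 53 will not accept an empty batch.
# aws route53 list-resource-record-sets --hosted-zone-id "${DOMAIN_NAME_ZONE_ID}" \
#   | delete_batch_for "${DNS_RECORD_PREFIX}.${DOMAIN_NAME}." > /tmp/delete-hugo-fed.json
# aws route53 change-resource-record-sets --hosted-zone-id "${DOMAIN_NAME_ZONE_ID}" \
#   --change-batch file:///tmp/delete-hugo-fed.json
```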

In addition to the step-by-step instructions provided for each lab, the repository also contains scripts to automate some of the activities being performed in this blog series. See the Using Scripts guide for more details.

Other Labs in the Series

- Introduction: A Blog Series About All Things Kubernetes

- Lab #1: Deploy a Kubernetes Cluster in AWS with Kops

- Lab #2: Maintaining your Kubernetes Cluster

- Lab #3: Creating Deployments & Services in Kubernetes

- Lab #4: Kubernetes Deployment Strategies: Rolling Updates, Canary & Blue-Green

- Lab #5: Setup Horizontal Pod & Cluster Autoscaling in Kubernetes

- Lab #6: Integrating Jenkins and Kubernetes

- Lab #7: Continuous Deployment with Jenkins and Kubernetes

- Lab #8: Continuous Deployment with Travis CI and Kubernetes

- Lab #9: Continuous Deployment with Wercker and Kubernetes