By Steven Eschinger | December 13, 2016

Note: This post has been deprecated and replaced by Lab #6: Integrating Jenkins and Kubernetes in the Getting To Know K8s blog series.

The following guide first takes you through the steps to create a Kubernetes cluster in AWS using Kops, a newer tool for deploying a production-ready Kubernetes cluster on AWS.

Once the Kubernetes cluster is available, we will then deploy Jenkins with the Kubernetes plugin configured to launch Jenkins slaves as Kubernetes pods on-demand.

Warning: Running the commands in this blog post will create AWS resources that may cost you money.

GitHub Repository

The GitHub repository for this demonstration can be found here.

Preparation of Local Environment

This section will guide you through the preparation of your local environment if you choose to provision the cluster from your machine. As an alternative, you can skip ahead to the Using Vagrant section to create a virtual machine with everything you need already installed.

Prerequisites

An AWS account

The AWS CLI installed, configured with a default region, and the security credentials of an IAM user which has the AdministratorAccess policy attached.

The AdministratorAccess policy is used for this demo for simplicity purposes. In a production environment, you will want to configure more granular permissions for the IAM user account. For more details, refer to the Setup IAM user section in the Kops repository.

Install the AWS CLI:

wget https://s3.amazonaws.com/aws-cli/awscli-bundle.zip

unzip awscli-bundle.zip

sudo ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws

Configure the AWS CLI (Method #1 - AWS Configure):

aws configure # Follow instructions

Configure the AWS CLI (Method #2 - ENV Variables):

export AWS_ACCESS_KEY_ID="Your-AWS-IAM-User-Access-Key-ID"

export AWS_SECRET_ACCESS_KEY="Your-AWS-IAM-User-Secret-Access-Key"

export AWS_DEFAULT_REGION="Your-AWS-Default-Region"

An AWS EC2 key pair created and the corresponding public key file on your local machine (default path is: ~/.ssh/id_rsa.pub).

A DNS hosted zone created in Route 53 (e.g., yourdomain.com). This is a requirement for Kops to create the Kubernetes cluster. For more details, refer to the Configure DNS section in the Kops repository.

A working Go environment with your GOPATH set properly.

Install Go:

wget https://storage.googleapis.com/golang/go1.8.linux-amd64.tar.gz

sudo tar -C /usr/local -xzf go1.8.linux-amd64.tar.gz

rm go1.8.linux-amd64.tar.gz

Set the required paths for Go:

export PATH=$PATH:/usr/local/go/bin

export GOPATH="Your-Go-Projects-Directory"

export PATH=$PATH:${GOPATH}/bin

Docker installed

Git installed

Jq installed

Install Kubectl (1.4.9)

wget https://storage.googleapis.com/kubernetes-release/release/v1.4.9/bin/linux/amd64/kubectl

sudo chmod +x kubectl

sudo mv kubectl /usr/local/bin/kubectl

Install Kops (1.5.1)

wget https://github.com/kubernetes/kops/releases/download/1.5.1/kops-linux-amd64

sudo chmod +x kops-linux-amd64

sudo mv kops-linux-amd64 /usr/local/bin/kops

Clone the repository

git clone https://github.com/smesch/jenkins-with-kubernetes-slaves.git

cd jenkins-with-kubernetes-slaves

Now that your local environment is prepared, you can skip ahead to the Update the Variables Script section.
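Before moving on, it can help to confirm that the tools from the prerequisites are actually on your PATH. The following is a minimal sketch of such a check; `missing_tools` is a hypothetical helper written for this post and is not part of the repository.

```shell
# Print the name of every given command that is not found on PATH.
# Returns non-zero if at least one command is missing.
missing_tools() {
  local rc=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      rc=1
    fi
  done
  return "$rc"
}

# Tool list taken from the prerequisites in this section.
if missing_tools aws go docker git jq kubectl kops; then
  echo "All required tools found"
else
  echo "Install the tools listed above before continuing" >&2
fi
```

The check only confirms that each command exists; it does not verify the versions used in this guide (kubectl 1.4.9, Kops 1.5.1).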

Using Vagrant

Prerequisites

An AWS account

AWS environment variables set for default region & security credentials of an IAM user which has the AdministratorAccess policy attached.

The AdministratorAccess policy is used for this demo for simplicity purposes. In a production environment, you will want to configure more granular permissions for the IAM user account. For more details, refer to the Setup IAM user section in the Kops repository.

export AWS_ACCESS_KEY_ID="Your-AWS-IAM-User-Access-Key-ID"

export AWS_SECRET_ACCESS_KEY="Your-AWS-IAM-User-Secret-Access-Key"

export AWS_DEFAULT_REGION="Your-AWS-Default-Region"

An AWS EC2 key pair created and the corresponding public key file on your local machine (default path is: ~/.ssh/id_rsa.pub)

A DNS hosted zone created in Route 53 (e.g., yourdomain.com). This is a requirement for Kops to create the Kubernetes cluster. For more details, refer to the Configure DNS section in the Kops repository.

Vagrant and VirtualBox installed

Git installed

Clone the Repository

git clone https://github.com/smesch/jenkins-with-kubernetes-slaves.git

cd jenkins-with-kubernetes-slaves

Vagrantfile

If the local path to your AWS EC2 key pair public key file is not the default (~/.ssh/id_rsa.pub), you will need to update the aws_keypair_pub_key_path variable in the Vagrantfile:

aws_keypair_pub_key_path = "~/.ssh/id_rsa.pub"

Boot and Connect to the Vagrant Virtual Machine

Run the following from the root of the repository where the Vagrantfile is located:

vagrant up

The provisioning of the Vagrant environment will take approximately 5 minutes, during which the following is happening:

The AWS environment variables $AWS_ACCESS_KEY_ID, $AWS_SECRET_ACCESS_KEY, $AWS_DEFAULT_REGION defined on the host are set on the guest

The AWS EC2 key pair public key file is copied from the host to the guest

Git is installed and the repository is cloned on the guest

The /scripts/provision-vagrant.sh script is run, which installs unzip, jq, go, the AWS CLI, Docker, kubectl and kops

Once the Vagrant virtual machine is started, run the following command to connect to it and then move into the root of the repository:

vagrant ssh

cd jenkins-with-kubernetes-slaves

Update the Variables Script

Once you have set up your local environment or provisioned and logged into your Vagrant environment, you will need to update the /scripts/variables.sh script with your specific details:

# Set values for variables

export AWS_REGION="us-east-1"

export AWS_AVAIL_ZONE="us-east-1b"

export DOMAIN_NAME="k8s.c3group.io"

export CLUSTER_MASTER_SIZE="t2.micro"

export CLUSTER_NODE_SIZE="t2.micro"

export JENKINS_DOCKER_IMAGE="smesch/jenkins-kubernetes-leader-custom:2.32.3"

export AWS_KEYPAIR_PUB_KEY_PATH="~/.ssh/id_rsa.pub"

# Set KOPS_STATE_STORE to S3 bucket name (leave as-is)

export KOPS_STATE_STORE="s3://${DOMAIN_NAME}"

AWS_REGION: AWS region where the Kubernetes cluster will be provisioned (default: us-east-1)

AWS_AVAIL_ZONE: AWS availability zone where the Kubernetes cluster will be provisioned (default: us-east-1b)

DOMAIN_NAME: Your domain name that is hosted in Route 53, where the DNS records for the Kubernetes cluster will be created. This value must be updated with your own domain; otherwise Kops will fail to create the Kubernetes cluster.

CLUSTER_MASTER_SIZE: EC2 instance size of the single master instance that will be created for the Kubernetes cluster (default: t2.micro)

CLUSTER_NODE_SIZE: EC2 instance size of the two node instances that will be created for the Kubernetes cluster (default: t2.micro)

JENKINS_DOCKER_IMAGE: Docker image that will be used for the Jenkins leader. Change this only if you are using a customized Docker image for Jenkins

AWS_KEYPAIR_PUB_KEY_PATH: Leave as-is if you are using Vagrant. If running locally, update this if your AWS EC2 key pair public key file is not located at ~/.ssh/id_rsa.pub

KOPS_STATE_STORE: Leave as-is, will set the S3 bucket URL to the domain you specified for the DOMAIN_NAME variable

For the purpose of the demo and to keep the AWS costs down, the default instance size for the master and node instances is t2.micro. In a production environment, you would want to use larger instance sizes.
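Because a wrong value here only shows up once AWS resources are already being created, a quick sanity check of the variables can save time. The sketch below uses `check_variables`, a hypothetical helper that is not part of the repository. It assumes the usual zone naming of region plus one letter (for example us-east-1b in us-east-1) and treats the sample domain shown above as a value that still needs replacing.

```shell
# Sanity-check the values from variables.sh before creating AWS resources.
# Usage: check_variables REGION ZONE DOMAIN
check_variables() {
  local region="$1" zone="$2" domain="$3"

  # Assumption: a zone name is the region name followed by one character.
  case "$zone" in
    "$region"?) ;;
    *) echo "AWS_AVAIL_ZONE '$zone' is not in AWS_REGION '$region'" >&2
       return 1 ;;
  esac

  # The sample domain from this post must be replaced with your own.
  if [ -z "$domain" ] || [ "$domain" = "k8s.c3group.io" ]; then
    echo "DOMAIN_NAME must be set to your own Route 53 domain" >&2
    return 1
  fi
}

# Example with placeholder values:
check_variables "us-east-1" "us-east-1b" "k8s.yourdomain.com" && echo "variables look consistent"
```

After sourcing ./scripts/variables.sh, the same function could be called as check_variables "$AWS_REGION" "$AWS_AVAIL_ZONE" "$DOMAIN_NAME".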

Create the Kubernetes Cluster

Once you have updated the variables.sh script, you can then proceed to create the Kubernetes cluster by launching the /scripts/create-cluster.sh script. The script must be launched from the root of the repository:

$ ./scripts/create-cluster.sh

{

"Location": "/k8s.c3group.io"

}

I0926 17:56:37.614045 24007 cluster.go:362] Assigned CIDR 172.20.64.0/19 to zone us-east-1b

I0926 17:56:37.944348 24007 cluster.go:338] Using kubernetes latest stable version: v1.3.7

...

...

Contents of the Create-Cluster Script

# Load variables from variables.sh script

source ./scripts/variables.sh

# Create the S3 bucket used for Kubernetes cluster configuration storage

aws s3api create-bucket --region ${AWS_REGION} --bucket ${DOMAIN_NAME}

# Create the Kubernetes cluster

kops create cluster --master-size=${CLUSTER_MASTER_SIZE} --node-size=${CLUSTER_NODE_SIZE} --zones=${AWS_AVAIL_ZONE} --ssh-public-key="${AWS_KEYPAIR_PUB_KEY_PATH}" --kubernetes-version=1.4.9 ${DOMAIN_NAME}

kops update cluster ${DOMAIN_NAME} --yes

- The variables that you defined in the /scripts/variables.sh script are loaded

- The AWS S3 bucket is created with the domain you specified for the DOMAIN_NAME variable

- Kops commands are run to provision the Kubernetes cluster
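One detail to keep in mind if you change AWS_REGION: outside us-east-1, the aws s3api create-bucket command also needs a --create-bucket-configuration LocationConstraint=<region> argument. The sketch below shows one way to build the arguments for either case. `create_bucket_args` is a hypothetical helper, not something the repository script contains, and the example only prints the command instead of calling AWS.

```shell
# Print the arguments for "aws s3api create-bucket" for a given region.
# Usage: create_bucket_args REGION BUCKET
create_bucket_args() {
  local region="$1" bucket="$2"
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 is the default location and takes no LocationConstraint.
    echo "--region $region --bucket $bucket"
  else
    echo "--region $region --bucket $bucket --create-bucket-configuration LocationConstraint=$region"
  fi
}

# Dry run with placeholder values: show the command without executing it.
echo "aws s3api create-bucket $(create_bucket_args eu-west-1 k8s.yourdomain.com)"
```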

The creation of the Kubernetes cluster will take about 5 to 7 minutes. To verify that the cluster was created successfully, you can run the following command to check that the nodes are reporting a “Ready” status:

$ kubectl get nodes

NAME STATUS AGE

ip-172-20-77-12.ec2.internal Ready,SchedulingDisabled 7m

ip-172-20-78-169.ec2.internal Ready 7m

ip-172-20-78-170.ec2.internal Ready 7m
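If you would rather check the node status from a script than by eye, the output can be counted with awk. This is a minimal sketch that assumes the three-column layout shown above (NAME, STATUS, AGE); `count_ready_nodes` is a hypothetical helper name.

```shell
# Count nodes whose STATUS is "Ready" (optionally followed by other flags
# such as ",SchedulingDisabled"). Reads "kubectl get nodes" output on stdin.
count_ready_nodes() {
  awk 'NR > 1 && $2 ~ /^Ready(,|$)/ { n++ } END { print n + 0 }'
}

# Sample output copied from this post; in practice use:
#   kubectl get nodes | count_ready_nodes
sample='NAME STATUS AGE
ip-172-20-77-12.ec2.internal Ready,SchedulingDisabled 7m
ip-172-20-78-169.ec2.internal Ready 7m
ip-172-20-78-170.ec2.internal Ready 7m'

printf '%s\n' "$sample" | count_ready_nodes   # prints 3
```

A node reporting NotReady is not counted, because the pattern only matches a status that starts with "Ready".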

Deploy Jenkins to the Cluster

Once you have created the cluster and the three instances are reporting a “Ready” status, you can then proceed to deploy the Jenkins leader to the Kubernetes cluster by launching the /scripts/deploy-jenkins.sh script. The script must be launched from the root of the repository:

$ ./scripts/deploy-jenkins.sh

deployment "jenkins-leader" created

persistentvolumeclaim "jenkins-leader-pvc" created

persistentvolume "jenkins-leader-pv" created

service "jenkins-leader-svc" created

The creation of the Jenkins leader will take about 3 minutes.

Contents of the Deploy-Jenkins Script

# Load variables from variables.sh script

source ./scripts/variables.sh

# Check if there is already a volume available in AWS EC2 with a Name tag value of "jenkins-kubernetes-volume"

export JENKINS_KUBERNETES_VOLUME_CHECK=$(aws ec2 describe-volumes --region ${AWS_REGION} --filters Name=tag-key,Values="Name" Name=tag-value,Values="jenkins-kubernetes-volume" --output text --query 'Volumes[*].VolumeId')

# Create an AWS EC2 volume for Jenkins and replace the VolumeID in the jenkins-kubernetes-leader-per-vol.yaml file

if [[ -z "$JENKINS_KUBERNETES_VOLUME_CHECK" ]]; then

export JENKINS_KUBERNETES_VOLUME=$(aws ec2 create-volume --region ${AWS_REGION} --availability-zone ${AWS_AVAIL_ZONE} --size 32 --volume-type gp2 | jq -r ".VolumeId")

aws ec2 create-tags --region ${AWS_REGION} --resources $JENKINS_KUBERNETES_VOLUME --tags Key=Name,Value=jenkins-kubernetes-volume

sed -i -e "s/vol-.*/$JENKINS_KUBERNETES_VOLUME/g" ./kubernetes/jenkins-kubernetes-leader-per-vol.yaml

fi

# Set Jenkins Docker image name in the jenkins-kubernetes-leader-deploy.yaml file

sed -i -e "s|image: .*|image: $JENKINS_DOCKER_IMAGE|g" ./kubernetes/jenkins-kubernetes-leader-deploy.yaml

# Create Kubernetes objects

kubectl create -f ./kubernetes

- The variables that you defined in the /scripts/variables.sh script are loaded

- It checks whether an AWS EBS volume with a name tag of “jenkins-kubernetes-volume” has already been created and is available

- If no AWS EBS volume with a name tag of “jenkins-kubernetes-volume” is available, it will create it

- This volume will be used as the persistent volume for the Jenkins leader, which will ensure that the Jenkins configuration is not lost if the Jenkins leader pod in Kubernetes crashes or is rescheduled on another node

- The AWS EBS VolumeID is written to the /kubernetes/jenkins-kubernetes-leader-per-vol.yaml Kubernetes manifest, which will be used to define the persistent volume for the Jenkins leader

- The Docker image that you specified for the JENKINS_DOCKER_IMAGE variable is written to the /kubernetes/jenkins-kubernetes-leader-deploy.yaml Kubernetes manifest

- The Kubernetes manifests for the Jenkins leader, which are located in the /kubernetes/ directory, will be applied to the cluster
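To see what the two sed substitutions in the script do, you can try them on a throwaway file. The sketch below assumes GNU sed (for the -i -e form). The two YAML lines and the volume IDs are illustrative placeholders and the real manifests may look different. `apply_substitutions` is a hypothetical wrapper around the same two expressions.

```shell
# Apply the same two substitutions as deploy-jenkins.sh to a single file.
# Usage: apply_substitutions FILE VOLUME_ID IMAGE
apply_substitutions() {
  local file="$1" volume_id="$2" image="$3"
  # Replace everything from "vol-" to the end of the line with the new ID.
  sed -i -e "s/vol-.*/$volume_id/g" "$file"
  # "|" is used as the delimiter because image names contain "/".
  sed -i -e "s|image: .*|image: $image|g" "$file"
}

# Try it on a temporary file with placeholder content.
tmp=$(mktemp)
printf '%s\n' '    volumeID: vol-0123456789abcdef0' \
              '    image: example/jenkins:old' > "$tmp"
apply_substitutions "$tmp" "vol-0fedcba9876543210" \
  "smesch/jenkins-kubernetes-leader-custom:2.32.3"
cat "$tmp"
rm -f "$tmp"
```

Note that the first expression replaces through the end of the line, so anything that followed the volume ID on that line would be removed as well.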

Kubernetes Manifests

The resources specified in the following Kubernetes manifests will be created for the Jenkins leader:

jenkins-kubernetes-leader-per-vol.yaml: Creates a persistent volume that is backed by the AWS EBS volume that was created by the deploy-jenkins.sh script

jenkins-kubernetes-leader-per-vol-claim.yaml: Creates a persistent volume claim and assigns it to the Jenkins leader deployment

jenkins-kubernetes-leader-deploy.yaml: Creates the Jenkins leader deployment, which specifies which Docker image to run (specified in the variables.sh script), the ports to be exposed (8080 for the web UI and 50000 for Jenkins slave communication), and the directory (/var/jenkins_home) where the persistent volume is mounted

jenkins-kubernetes-leader-svc.yaml: Creates a LoadBalancer type service which exposes ports 80 (proxied to 8080) & 50000 externally

Using Jenkins

Accessing the Jenkins Web UI

To obtain the URL for the Jenkins Web UI, run the following command, copy the value for “LoadBalancer Ingress”, and paste it into a browser.

$ kubectl describe svc jenkins-leader-svc

Name: jenkins-leader-svc

Namespace: default

Labels: run=jenkins-leader

Selector: run=jenkins-leader

Type: LoadBalancer

IP: 100.64.46.174

LoadBalancer Ingress: a6bea4c3a841511e6ac380ecbb58edf9-27725344.us-east-1.elb.amazonaws.com

Port: http 80/TCP

NodePort: http 32425/TCP

Endpoints: 100.96.1.3:8080

Port: slave 50000/TCP

NodePort: slave 31510/TCP

Endpoints: 100.96.1.3:50000

Session Affinity: None

No events.

Warning: Jenkins authentication is turned off for this demo for simplicity purposes. In a production environment, you will want to configure authentication for Jenkins.
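If you prefer not to copy the hostname by hand, it can be pulled out of the describe output with awk. This is a minimal sketch and `lb_hostname` is a hypothetical helper name. As an alternative that should also work, kubectl can print the field directly with `kubectl get svc jenkins-leader-svc -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'`, assuming your kubectl version supports JSONPath output.

```shell
# Extract the "LoadBalancer Ingress" value from "kubectl describe svc" output
# read on stdin. The separator after the colon may be spaces or tabs.
lb_hostname() {
  awk -F':[ \t]*' '/^LoadBalancer Ingress:/ { print $2 }'
}

# Sample lines copied from the output above; in practice use:
#   kubectl describe svc jenkins-leader-svc | lb_hostname
printf '%s\n' \
  'Name: jenkins-leader-svc' \
  'LoadBalancer Ingress: a6bea4c3a841511e6ac380ecbb58edf9-27725344.us-east-1.elb.amazonaws.com' \
  'Port: http 80/TCP' | lb_hostname
```

The service listens on port 80, so the printed hostname can be opened in a browser as http://<hostname>.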

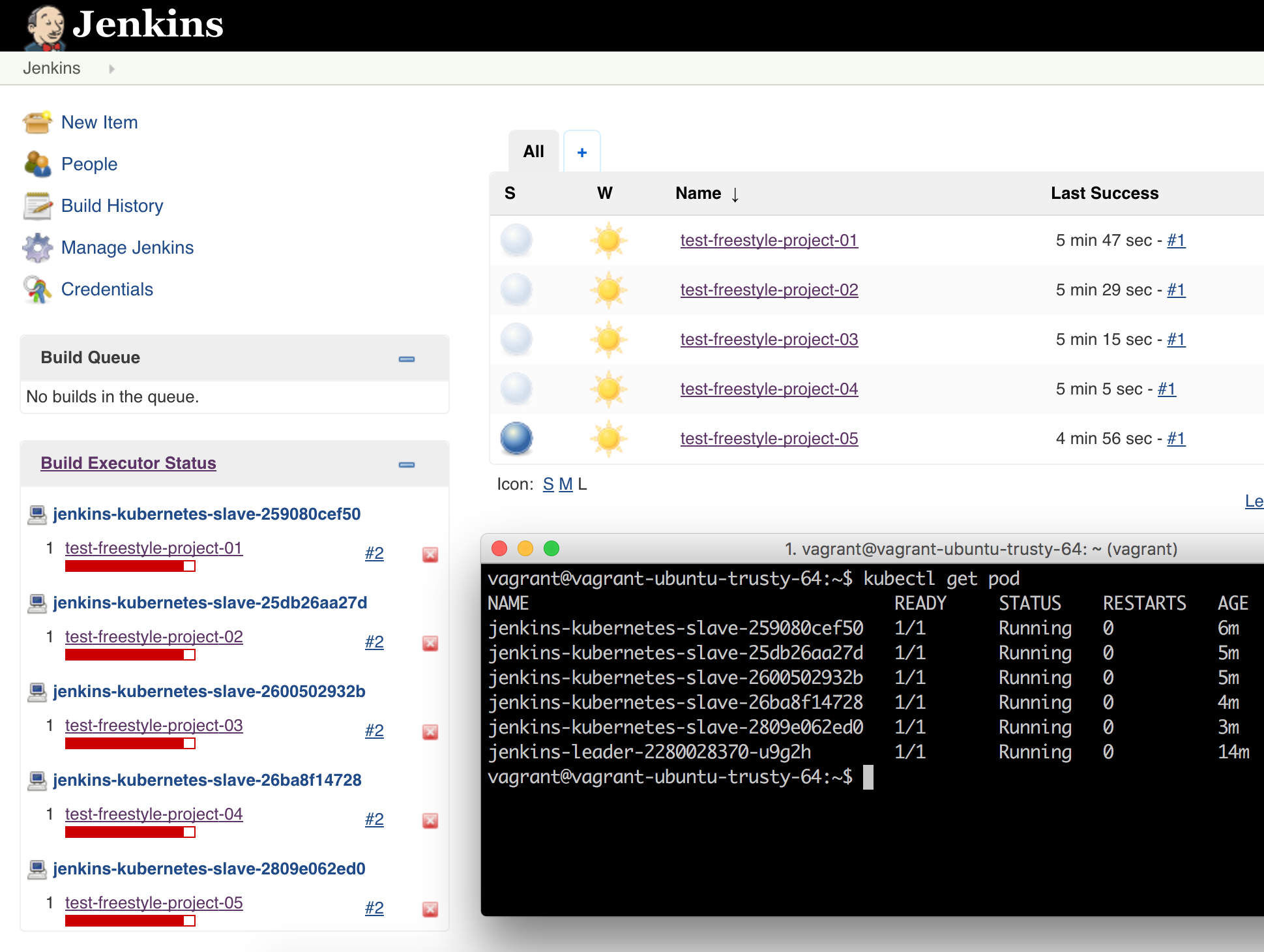

Run the Test Job in Jenkins

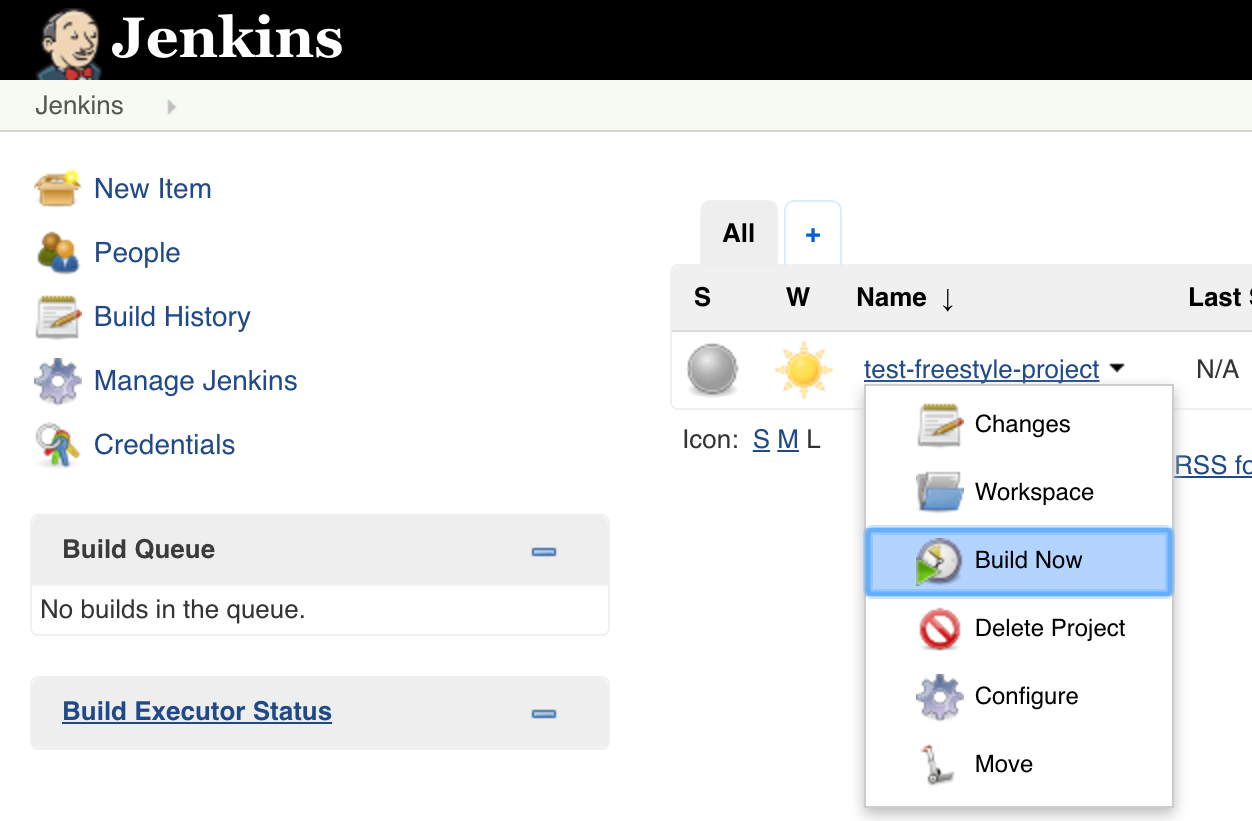

To verify that the Kubernetes plugin is working properly, you can run the test job named “test-freestyle-project” that is included with the Jenkins Docker image. To run the job, click the arrow next to the job name on the dashboard and choose “Build Now”:

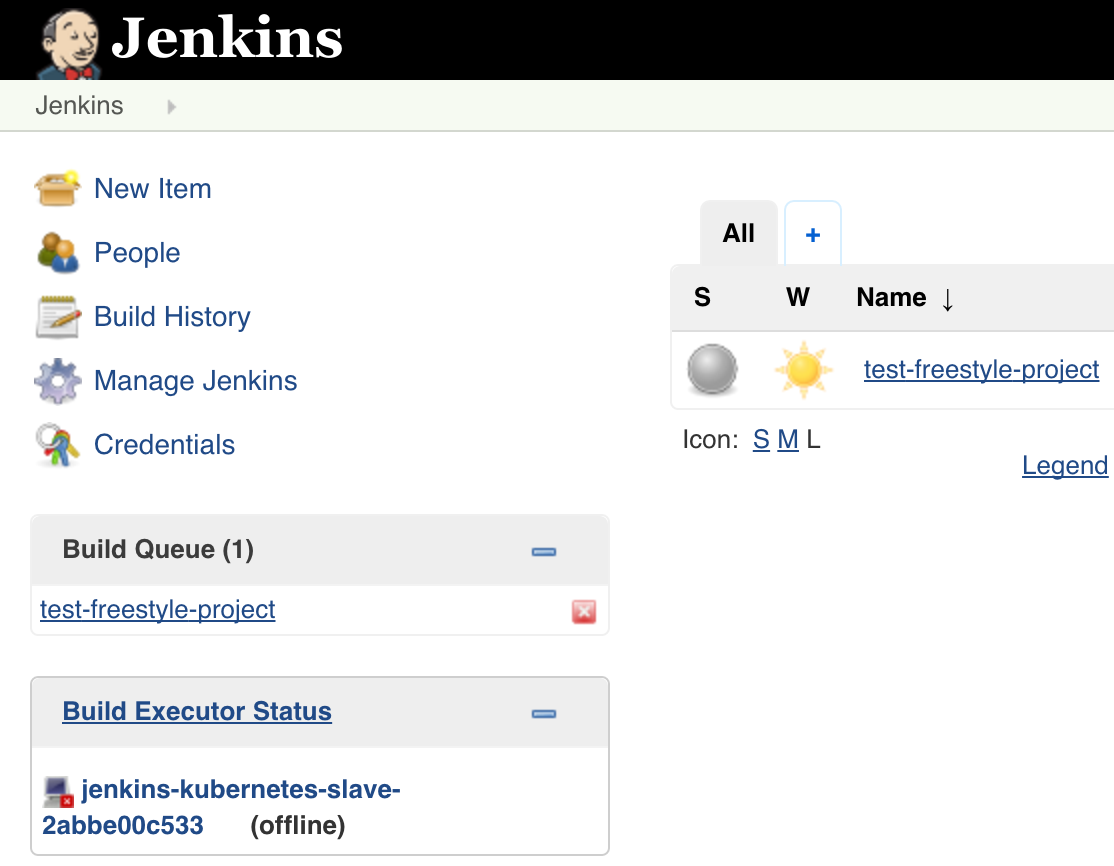

Shortly after you start the job, you should see a new Jenkins slave being created in the “Build Executor Status” section on the dashboard:

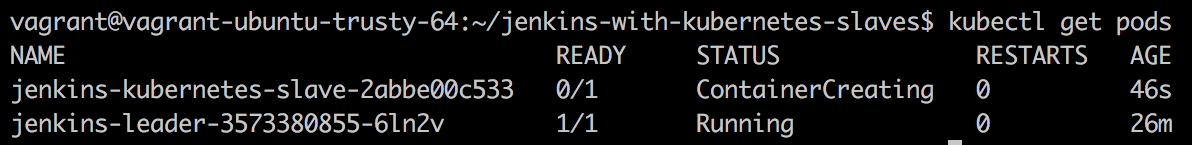

You can also see the corresponding Kubernetes pod getting created by running “kubectl get pods”:

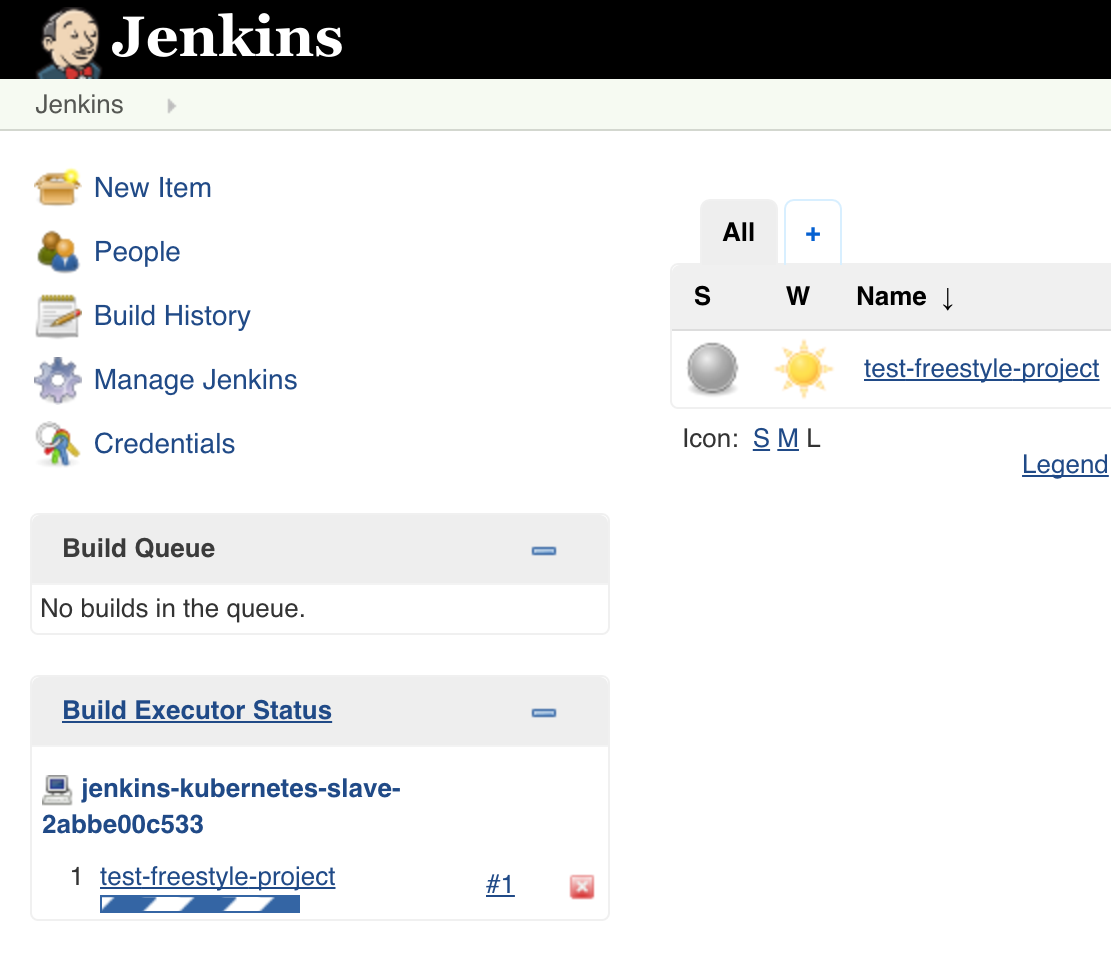

Finally, you will see the newly created Jenkins slave running the job in the “Build Executor Status” section on the dashboard:

Delete the Kubernetes Cluster

You can delete the Kubernetes cluster by launching the /scripts/delete-cluster.sh script. The script must be launched from the root of the repository:

$ ./scripts/delete-cluster.sh

TYPE NAME ID

autoscaling-config master-us-east-1b.masters.k8s.c3group.io-20160930144921 master-us-east-1b.masters.k8s.c3group.io-20160930144921

autoscaling-config nodes.k8s.c3group.io-20160930145013 nodes.k8s.c3group.io-20160930145013

autoscaling-group master-us-east-1b.masters.k8s.c3group.io master-us-east-1b.masters.k8s.c3group.io

...

...

Contents of the Delete-Cluster Script

# Load variables from variables.sh script

source ./scripts/variables.sh

# Delete the Kubernetes cluster

kops delete cluster ${DOMAIN_NAME} --yes

# Wait for Kubernetes cluster instances to be fully terminated

sleep 60

# Delete the persistent volume used for Jenkins

export JENKINS_KUBERNETES_VOLUME=$(aws ec2 describe-volumes --region ${AWS_REGION} --filters Name=tag-key,Values="Name" Name=tag-value,Values="jenkins-kubernetes-volume" --output text --query 'Volumes[*].VolumeId')

aws ec2 delete-volume --region ${AWS_REGION} --volume-id $JENKINS_KUBERNETES_VOLUME

# Delete the S3 bucket used for Kubernetes cluster configuration storage

aws s3api delete-bucket --region ${AWS_REGION} --bucket ${DOMAIN_NAME}

- The variables that you defined in the /scripts/variables.sh script are loaded

- Kops delete cluster is run to delete the Kubernetes cluster

- It pauses for 60 seconds for the Kubernetes cluster instances to be fully terminated

- The AWS EC2 VolumeID of the Jenkins leader persistent volume is obtained and then used to delete the volume

- The AWS S3 bucket is deleted
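The fixed 60-second pause is a simple approach, but it may be too short if instance termination is slow, in which case the volume deletion would fail. One alternative is to poll for a condition with a timeout. The sketch below is an illustration and not what the repository script does; `wait_until` is a hypothetical helper, and the local demonstration waits for a marker file so that it runs without touching AWS.

```shell
# Re-run a command until it succeeds or a timeout is reached.
# Usage: wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
wait_until() {
  local timeout="$1" interval="$2" waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1   # gave up: the command never succeeded in time
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Local demonstration: the condition becomes true once the marker file exists.
marker=$(mktemp -u)
( sleep 1; : > "$marker" ) &
wait_until 10 1 test -e "$marker" && echo "condition met"
rm -f "$marker"
```

In the delete script, the polled command could be a small function that checks whether the volume state reported by aws ec2 describe-volumes is "available" before aws ec2 delete-volume is called.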